Case Study: RAG-based natural language question answering

2024/04/11 | Written By: YoungHoon Jeon

Retrieval-Augmented Generation (RAG) refers to the processes and technologies that generate responses by incorporating search results. For Large Language Models (LLMs), it improves response accuracy by drawing on external data sources. When a user enters a prompt, the system queries a knowledge database and augments the prompt with the search results. The system prompt, the user prompt, and the retrieved external knowledge are combined into the input prompt for the LLM, which can then generate a response that meets the user's needs.
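The augmentation step described above can be sketched as a single function that merges the three inputs into one prompt string. This is a minimal illustration, not Upstage's implementation: the `Passage` type, the `build_augmented_prompt` name, the prompt layout, and the sample article are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """A retrieved snippet and an identifier for its source (hypothetical type)."""
    source: str
    text: str


def build_augmented_prompt(system_prompt: str, question: str, passages: list[Passage]) -> str:
    """Merge the system prompt, retrieved passages, and user question into one LLM input."""
    # Number each passage so the model can cite it in its answer.
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        f"{system_prompt}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer using only the context above."
    )


# Illustrative placeholder inputs, not real articles.
prompt = build_augmented_prompt(
    "You are a news search assistant.",
    "What did the central bank decide this week?",
    [Passage("article-001", "The central bank held its policy rate steady.")],
)
print(prompt)
```

Placing the retrieved text in the prompt, rather than relying on what the model memorized during training, is what lets the answer reflect the search results.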

RAG addresses three main needs. First, it reduces hallucination in LLMs: supplying relevant documents in the prompt (in-context learning) lowers the rate of erroneous responses. Second, it supports real-time information updates, because new data can be pulled from external search engines as it appears. Third, it lowers the cost of keeping knowledge current, offering a cheaper alternative to traditional fine-tuning.

In addition to its proprietary LLM, Upstage offers custom RAG systems tailored to client specifications. These systems combine Upstage's search and query engines with customer-specific optimization technologies and are in substantial demand across diverse industries. Notably, Upstage has developed a search engine that answers users' natural language queries with contextually relevant responses based on real-time news articles, an example of how effective a RAG implementation can be.

Challenge

Conventional news search relies on keyword-based queries, so it could not accommodate users' natural language questions. Generative AI looked like a promising answer, but news search demands accurate information, and the hallucination inherent in LLMs made that a formidable challenge. Moreover, news platforms receive a constant stream of articles in real time, which called for a service beyond the capabilities of general-purpose LLMs.

To address these challenges, the Korea Press Foundation commissioned Upstage to design a new news search service called "BIG KINDS AI." Upstage built it on a RAG system. Because generative AI can respond to natural language queries, the service offers a significantly better user experience than traditional news search engines, and the initiative led to a notable increase in article search traffic on the news platform. By deploying a news-specialized generative AI solution, Upstage built a system that performs real-time, news-based search and answering while guarding against hallucination.

Solution

Upstage developed the RAG system using a dataset of approximately 82 million articles provided by the Korea Press Foundation. Upstage then launched BIG KINDS AI with natural language question-based search and QA, support for real-time data updates, and the precision and stability required for deployment in public institutions. Additional features included time-limited search, source attribution, and handling of diverse natural language query intents.
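Time-limited search and source attribution can be combined in one filtering step. The sketch below assumes a simple `Article` record with a publication date and publisher (hypothetical fields) and uses plain keyword matching in place of the real search engine; the point is only that results outside the date window are dropped and each remaining hit carries a citation.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Article:
    """Hypothetical article record; field names are assumptions."""
    article_id: str
    publisher: str
    published: date
    text: str


def time_limited_search(
    articles: list[Article], query_terms: list[str], start: date, end: date
) -> list[tuple[Article, str]]:
    """Return (article, citation) pairs for in-window articles that mention a query term."""
    terms = {t.lower() for t in query_terms}
    results = []
    for a in articles:
        # Time limit: skip anything published outside [start, end].
        if not (start <= a.published <= end):
            continue
        # Keyword overlap stands in for the real retrieval engine.
        if terms & set(a.text.lower().split()):
            # Source attribution: keep a citation string next to each hit.
            citation = f"{a.publisher}, {a.published.isoformat()} ({a.article_id})"
            results.append((a, citation))
    return results


# Illustrative placeholder articles, not real data.
articles = [
    Article("a1", "Daily A", date(2024, 3, 1), "Exports rose in March"),
    Article("a2", "Daily B", date(2023, 1, 5), "Exports fell sharply"),
]
hits = time_limited_search(articles, ["exports"], date(2024, 1, 1), date(2024, 12, 31))
for _, citation in hits:
    print(citation)
```

Only the 2024 article survives the date filter, and its citation is printed as `Daily A, 2024-03-01 (a1)`.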

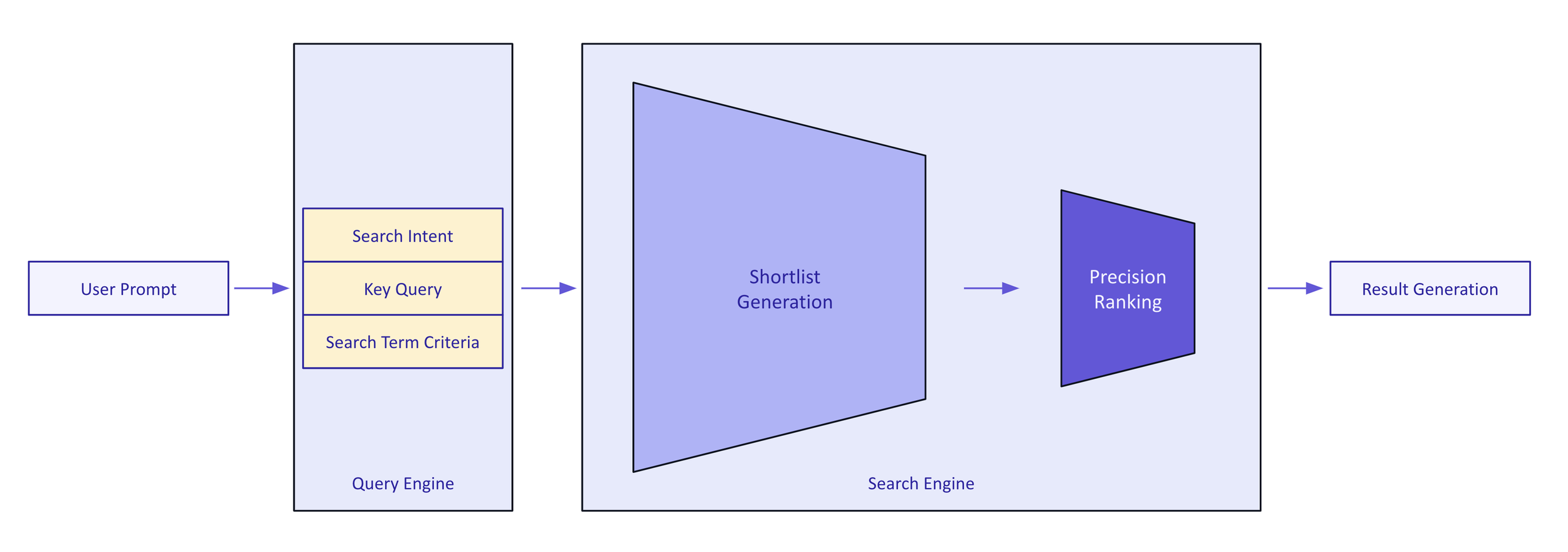

BIG KINDS AI handles the large-scale dataset efficiently with two-stage retrieval, which balances resource usage against search quality. When a user prompt arrives, the query engine first processes it to identify the search intent, analyze the search parameters, and extract the core query. The system then generates candidate results and ranks them precisely, returning the most relevant results to the user.
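The general two-stage pattern can be sketched as a cheap recall pass over every document followed by a more expensive scorer applied only to the shortlist. The scoring functions here are deliberately simple stand-ins (term overlap, then coverage plus an exact-phrase bonus); a production system would more likely use vector search and a learned re-ranker, and none of these names come from BIG KINDS AI.

```python
import heapq
from typing import Callable


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def recall_score(query: str, doc: str) -> int:
    """Stage 1: cheap score, the number of distinct query terms present in the doc."""
    return len(set(tokenize(query)) & set(tokenize(doc)))


def precision_score(query: str, doc: str) -> float:
    """Stage 2 stand-in: term coverage plus a bonus if the exact query phrase appears."""
    q = set(tokenize(query))
    coverage = len(q & set(tokenize(doc))) / len(q) if q else 0.0
    phrase_bonus = 1.0 if query.lower() in doc.lower() else 0.0
    return coverage + phrase_bonus


def two_stage_search(
    query: str,
    docs: list[str],
    k_candidates: int = 100,
    k_final: int = 10,
    rerank: Callable[[str, str], float] = precision_score,
) -> list[str]:
    # Stage 1: score every document cheaply and keep only the top k_candidates.
    candidates = heapq.nlargest(k_candidates, docs, key=lambda d: recall_score(query, d))
    # Stage 2: run the more expensive scorer on the short candidate list only.
    return sorted(candidates, key=lambda d: rerank(query, d), reverse=True)[:k_final]


docs = [
    "decision on the rate of interest",
    "interest rate decision announced",
    "sports results",
    "rate of inflation",
]
print(two_stage_search("interest rate decision", docs, k_candidates=2, k_final=2))
```

Both candidates contain all three query terms, so stage 1 cannot separate them; stage 2 moves the exact-phrase match to the top. The expensive scorer never touches the documents that stage 1 discarded, which is where the resource savings come from.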

High-performance, high-quality query/search engine building process

Furthermore, prompt engineering was tailored to the specific requirements of public institutions. Drawing on Upstage's expertise in prompt engineering and optimization, the team tuned the system to the client's priorities, including tone, using intent classification prompts, guardrail prompts, tone improvement prompts, and user prompts.
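One way to layer these prompt types is to classify the question's intent first, then stack guardrail, tone, and intent-specific instructions into a system message ahead of the user prompt. This is a minimal sketch under stated assumptions: the prompt texts, intent labels, and trigger words are hypothetical, and the keyword classifier stands in for what would more likely be an LLM call driven by an intent classification prompt.

```python
# Hypothetical prompt texts; these are not the actual BIG KINDS AI prompts.
GUARDRAIL_PROMPT = (
    "Answer only from the supplied news articles. "
    "If they do not contain the answer, say so."
)
TONE_PROMPT = "Use a neutral, formal tone suitable for a public institution."

# Hypothetical intent labels and trigger words (keyword stand-in for an LLM classifier).
INTENT_KEYWORDS = {
    "summary": ("summarize", "summary", "overview"),
    "timeline": ("when", "timeline", "history"),
}
INTENT_INSTRUCTIONS = {
    "summary": "Summarize the key points across the articles.",
    "timeline": "List the relevant events in chronological order.",
    "factual_qa": "Answer the question directly and concisely.",
}


def classify_intent(question: str) -> str:
    """Return the first intent whose trigger word appears in the question."""
    q = question.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(k in q for k in keywords):
            return intent
    return "factual_qa"


def build_messages(question: str) -> list[dict[str, str]]:
    """Stack guardrail, tone, and intent-specific instructions ahead of the user prompt."""
    intent = classify_intent(question)
    system = "\n".join([GUARDRAIL_PROMPT, TONE_PROMPT, INTENT_INSTRUCTIONS[intent]])
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


for m in build_messages("When did the new press law pass?"):
    print(m["role"], "->", m["content"])
```

Keeping each prompt type as a separate constant lets the guardrail or tone text be revised independently of the intent instructions.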

Next, customized search and indexing algorithms were implemented to fit the characteristics of the client's service. Integration with client systems provided seamless data pipeline connections and real-time indexing according to client preferences. Search algorithms were also configured to weight factors such as recency or accuracy, producing optimized search results.
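A recency-versus-accuracy trade-off is often expressed as a weighted blend of a relevance score and a time-decay term. The sketch below assumes relevance is already normalized to [0, 1] and uses exponential decay with a half-life; the function name, default weight, and seven-day half-life are illustrative assumptions, not the configuration used in BIG KINDS AI.

```python
from datetime import datetime, timedelta, timezone


def blended_score(
    relevance: float,
    published: datetime,
    now: datetime,
    recency_weight: float = 0.3,
    half_life_days: float = 7.0,
) -> float:
    """Mix a relevance score (assumed in [0, 1]) with an exponential recency decay.

    recency_weight=0 ranks purely on relevance; values near 1 strongly favor new articles.
    """
    # Clamp at zero so a future-dated article cannot get a recency above 1.0.
    age_days = max((now - published).total_seconds() / 86400, 0.0)
    # Recency is 1.0 for a brand-new article and halves every half_life_days.
    recency = 0.5 ** (age_days / half_life_days)
    return (1 - recency_weight) * relevance + recency_weight * recency


now = datetime(2024, 4, 11, tzinfo=timezone.utc)
week_old = now - timedelta(days=7)
print(round(blended_score(0.8, week_old, now), 2))  # 0.71
```

A week-old article is exactly one half-life old, so its recency term is 0.5 and the score is 0.7 × 0.8 + 0.3 × 0.5 = 0.71. Raising `recency_weight` per client preference shifts rankings toward newer articles without changing the relevance model.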

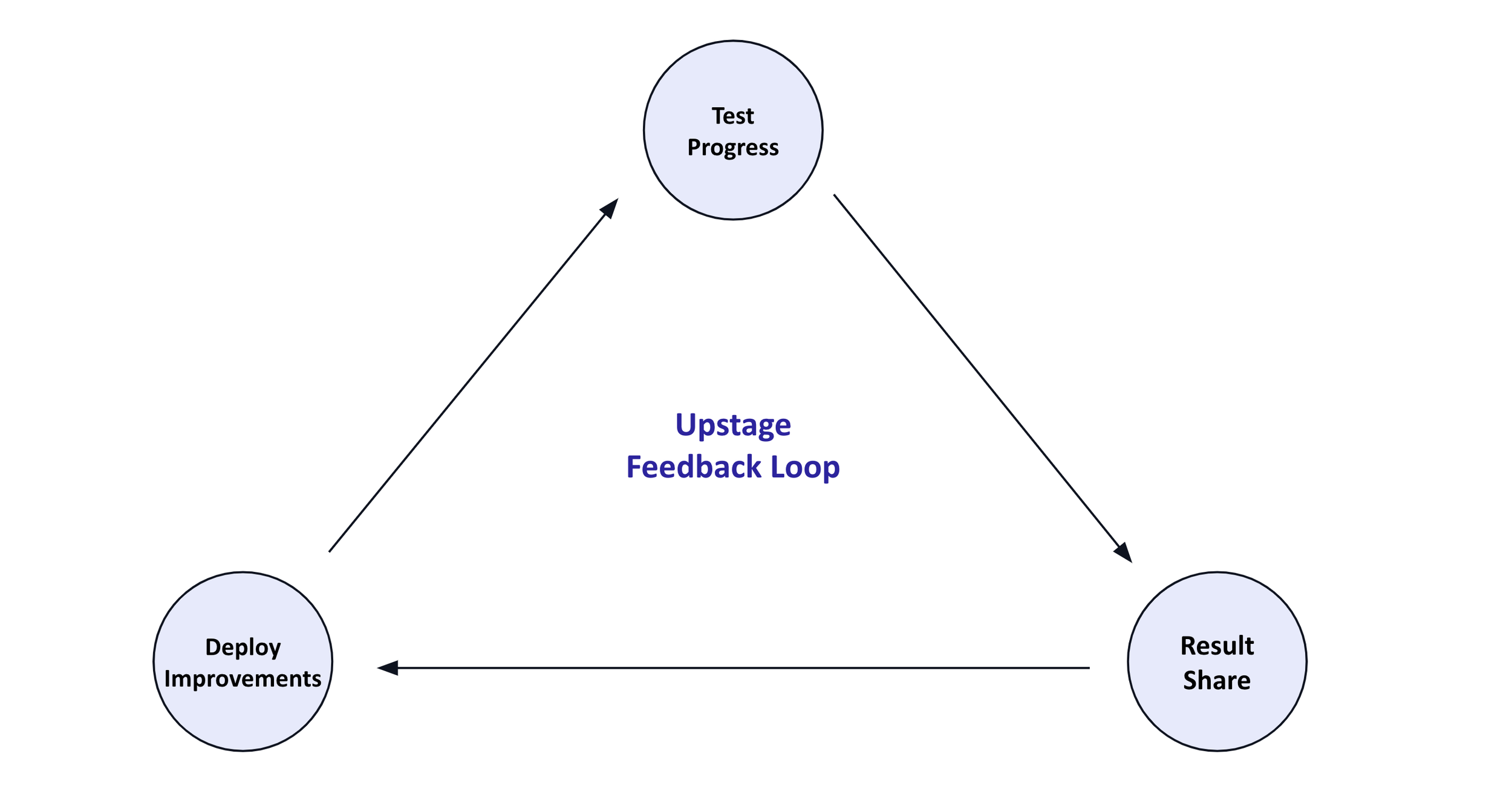

All services were tested rigorously through Upstage's iterative feedback loop. Client evaluation groups and Upstage's professional evaluation teams assessed the system in parallel, using predefined criteria and evaluation factors agreed on in advance. Upstage's professional evaluators built reliable evaluation datasets to keep testing unbiased. Test results were then shared with the development team, and this rapid feedback loop drove iterative improvements toward a stable, efficient deployment.

Upstage feedback loop

Leveraging Technology Partners

Working with the Korea Press Foundation, Upstage built the RAG system on AWS EC2 for cloud computing and S3 for data storage. This infrastructure supported dynamic indexing and vectorization of articles to match clients' natural language query intents.
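Dynamic indexing means new articles become searchable as soon as they are vectorized, without rebuilding the index. The in-memory sketch below illustrates that idea only: it uses feature hashing of words as a stand-in for a real embedding model and brute-force cosine similarity in place of a vector database. The vector size, class name, and method names are hypothetical.

```python
import math
import zlib

DIM = 1024  # hypothetical vector size; real embedding models produce learned dense vectors


def embed(text: str) -> list[float]:
    """Feature-hashing stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        # crc32 is deterministic across runs, unlike Python's built-in str hash.
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class IncrementalIndex:
    """In-memory vector index that accepts new articles while serving queries."""

    def __init__(self) -> None:
        self.ids: list[str] = []
        self.vectors: list[list[float]] = []

    def add(self, article_id: str, text: str) -> None:
        # Vectorize on arrival so the article is searchable immediately.
        self.ids.append(article_id)
        self.vectors.append(embed(text))

    def search(self, query: str, k: int = 5) -> list[tuple[str, float]]:
        q = embed(query)
        # Vectors are unit-length, so the dot product equals cosine similarity.
        scored = [(i, sum(a * b for a, b in zip(q, v))) for i, v in zip(self.ids, self.vectors)]
        return sorted(scored, key=lambda x: x[1], reverse=True)[:k]


index = IncrementalIndex()
index.add("a1", "semiconductor exports rise")
index.add("a2", "local football match")
print(index.search("semiconductor exports", k=1))
index.add("a3", "new tariff announcement")  # arrives after the index is already in use
print(index.search("new tariff announcement", k=1))
```

At the scale of tens of millions of articles, a brute-force scan like this would be replaced by an approximate nearest-neighbor index, but the add-then-search-immediately flow is the same.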

EC2 provides a flexible virtual computing environment suited to running chatbots, deploying servers, handling backend operations, and more. Its computational capacity supports complex natural language processing tasks, and running on EC2 instances enables fast, real-time responses to user queries.

S3 cloud storage also provides reliable storage and retrieval of large datasets, which is critical for training natural language processing models and managing model data. S3 supports access controls at both the bucket and object level, which helps maintain secure data governance.

Results and Benefits

BIG KINDS AI achieved a final quality evaluation score of 86 points, above the client's benchmark of 80 points. The client's evaluators gave an average score of 92.2 points, higher than the 82.5-point average from Upstage's own evaluators, which reflects both Upstage's rigorous quality management and strong customer satisfaction. In addition, Upstage's internal evaluations showed a notable performance advantage over generic services from other providers that are not specialized for the customer's data.

Customer & in-house project evaluation results

Together with the Korea Press Foundation, Upstage built a customer-centric RAG system. Using high-performance search and query engines, Upstage strengthened the retrieval stage that precedes response generation and optimized search algorithms for the requirements of the client's service. Prompt engineering further improved service quality, and Upstage added features to strengthen system reliability and set up a dedicated evaluation system to build customer confidence.

Moreover, by introducing a customized reasoning pipeline tailored to specific use cases, Upstage has demonstrated its technical capability. Unlike conventional RAG approaches, this pipeline allows key components such as embedding models, re-ranking modules, and search and conversation engines to be customized, giving considerable flexibility for performance optimization.
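A pipeline whose components can be swapped independently is commonly built against small interfaces. The sketch below uses Python `typing.Protocol` to define hypothetical retriever, re-ranker, and generator roles; the toy implementations (keyword retrieval, shortest-first re-ranking, and a generator that echoes the top passage instead of calling an LLM) are placeholders meant only to show that each stage can be replaced without touching the others.

```python
from dataclasses import dataclass
from typing import Protocol


class Retriever(Protocol):
    def retrieve(self, query: str, k: int) -> list[str]: ...


class Reranker(Protocol):
    def rerank(self, query: str, docs: list[str]) -> list[str]: ...


class Generator(Protocol):
    def generate(self, query: str, context: list[str]) -> str: ...


@dataclass
class RAGPipeline:
    """Hypothetical pipeline: any object matching each Protocol can be plugged in."""
    retriever: Retriever
    reranker: Reranker
    generator: Generator
    k: int = 20

    def answer(self, query: str) -> str:
        docs = self.retriever.retrieve(query, self.k)
        ranked = self.reranker.rerank(query, docs)
        return self.generator.generate(query, ranked)


class KeywordRetriever:
    """Toy retriever: documents sharing at least one word with the query."""

    def __init__(self, corpus: list[str]) -> None:
        self.corpus = corpus

    def retrieve(self, query: str, k: int) -> list[str]:
        terms = set(query.lower().split())
        return [d for d in self.corpus if terms & set(d.lower().split())][:k]


class ShortestFirstReranker:
    """Toy re-ranker: prefers shorter documents (stand-in for a learned model)."""

    def rerank(self, query: str, docs: list[str]) -> list[str]:
        return sorted(docs, key=len)


class EchoGenerator:
    """Toy generator: returns the top passage instead of calling an LLM."""

    def generate(self, query: str, context: list[str]) -> str:
        return context[0] if context else "No relevant articles found."


pipeline = RAGPipeline(
    KeywordRetriever(["rates held steady", "central bank rates held steady today", "weather"]),
    ShortestFirstReranker(),
    EchoGenerator(),
)
print(pipeline.answer("rates"))
```

Swapping in a different embedding-based retriever or re-ranking model only requires another class with the same method signature; `RAGPipeline` itself does not change.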