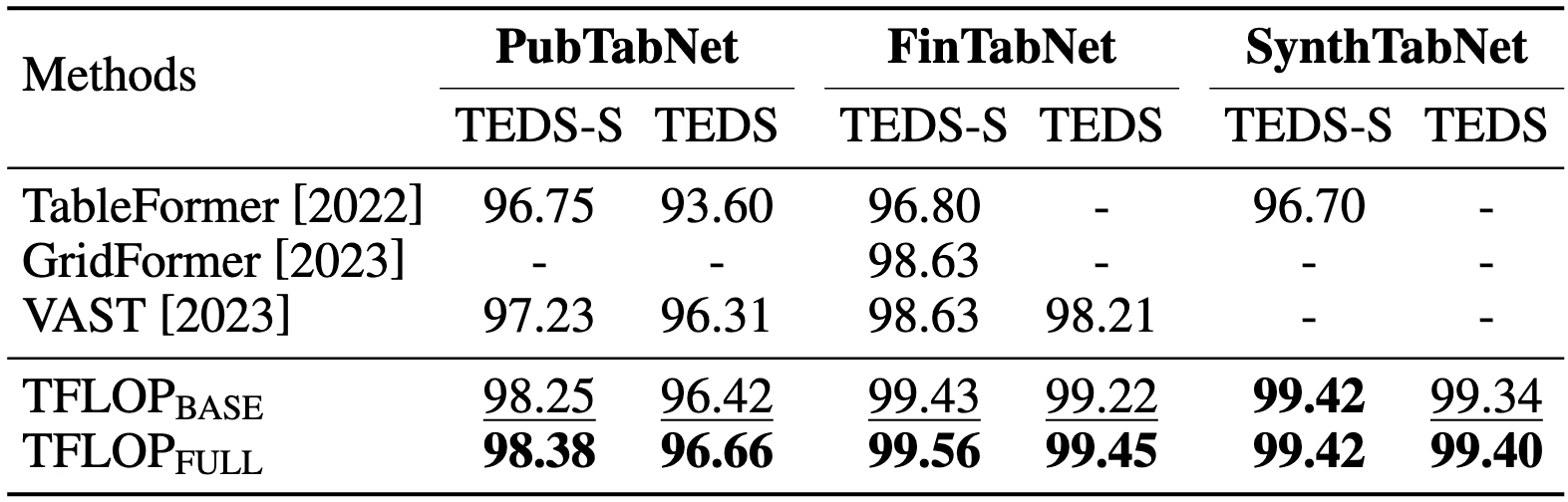

Tables are everywhere: spreadsheets, invoices, reports, and research papers. Yet accurately understanding their structure remains a challenge. That’s where Upstage’s Document Parse comes in. Powered by advanced table structure recognition (TSR) technology, it converts complex tables into machine-readable formats with high precision, making table data easy to use for automation and analysis.

What is TSR?

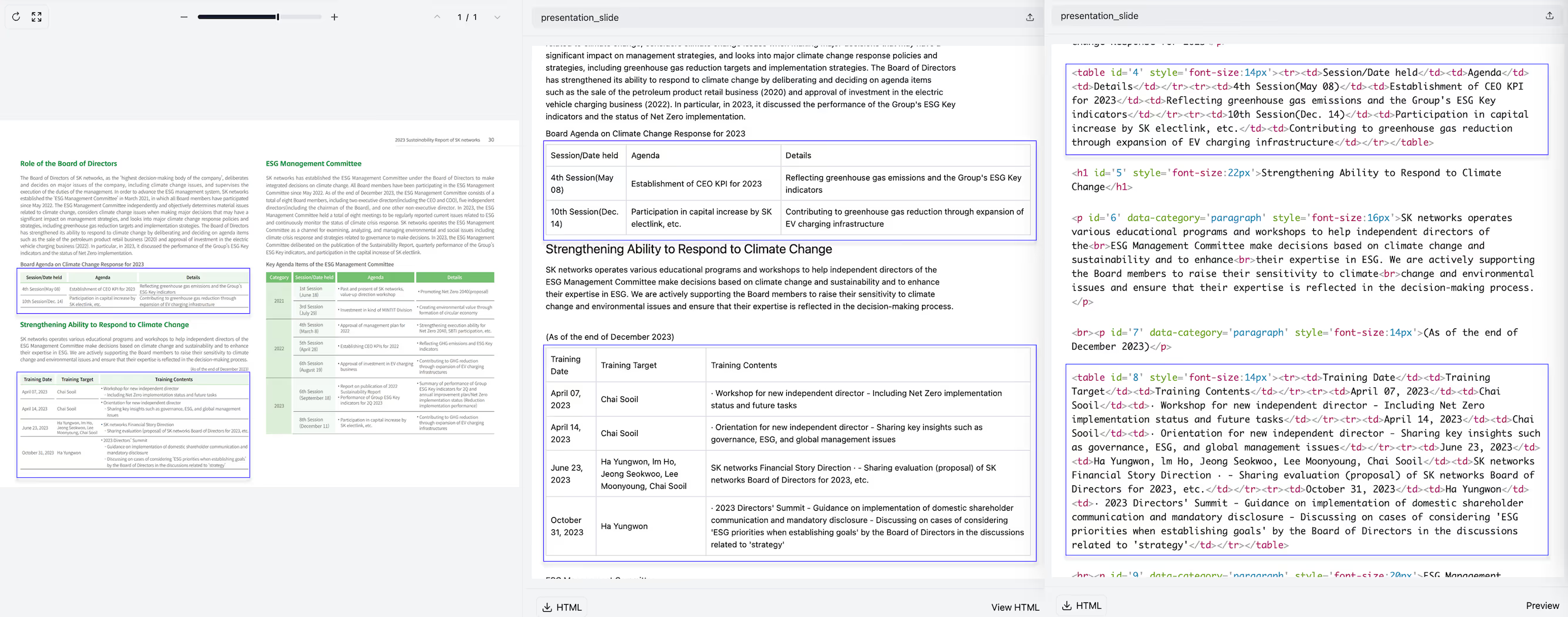

Table structure recognition (TSR) is the process of converting images of tables into structured text, such as HTML, that captures their rows, columns, and cells. It’s a crucial part of document intelligence, helping businesses automate data extraction from invoices, research papers, financial reports, and more.

However, tables come in all shapes and sizes. Some have merged cells, irregular layouts, or missing borders, which makes them difficult to process accurately. A robust TSR system must handle these complexities to capture the logical structure of each table while preserving the relationships and hierarchies between its cells.
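To make the target output concrete, here is a minimal Python sketch of how a table with merged cells can be serialized as HTML, where `rowspan` and `colspan` attributes record which cells are merged. The `Cell` class, the `to_html` helper, and the toy table are hypothetical illustrations, not Document Parse's actual output format.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Cell:
    """One logical table cell; spans default to a single row and column."""
    text: str
    rowspan: int = 1
    colspan: int = 1


def to_html(rows: list[list[Cell]]) -> str:
    """Serialize rows of cells as an HTML table.

    Each inner list holds only the cells that *start* in that row; a cell with
    rowspan > 1 is not repeated in the rows it extends into.
    """
    parts = ["<table>"]
    for row in rows:
        parts.append("<tr>")
        for cell in row:
            attrs = ""
            if cell.rowspan > 1:
                attrs += f' rowspan="{cell.rowspan}"'
            if cell.colspan > 1:
                attrs += f' colspan="{cell.colspan}"'
            parts.append(f"<td{attrs}>{escape(cell.text)}</td>")
        parts.append("</tr>")
    parts.append("</table>")
    return "".join(parts)


# Toy table: "Item" spans two header rows, "Sales" spans two columns.
table = [
    [Cell("Item", rowspan=2), Cell("Sales", colspan=2)],
    [Cell("Q1"), Cell("Q2")],
    [Cell("Widgets"), Cell("10"), Cell("12")],
]
print(to_html(table))
```

The second row contains only two `<td>` elements because its first column is already occupied by the merged "Item" cell. Recovering this kind of bookkeeping from an image alone is what makes merged cells hard for TSR.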

Introducing TFLOP: A smarter approach to table recognition

We’re excited to introduce TFLOP (Table Structure Recognition Framework with Layout Pointer Mechanism), a research breakthrough from Upstage AI that sets a new standard in table structure recognition.

TFLOP takes a different approach from traditional TSR models. Most existing methods generate both HTML tags and bounding-box coordinates for table cells, and the predicted boxes are then matched with OCR results. When a predicted box does not line up with the OCR text it should contain, that text can be assigned to the wrong cell or dropped entirely, so these pipelines need complex post-processing to correct the mistakes.
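The sketch below illustrates the kind of box matching described above, under a simplifying assumption: each OCR text box is assigned to the predicted cell box that covers the largest fraction of it, and is left unassigned if that fraction falls below a threshold. The `coverage` and `match_ocr_to_cells` helpers and the 0.5 threshold are hypothetical; real pipelines use a variety of matching rules.

```python
from typing import Optional

Box = tuple[float, float, float, float]  # (x0, y0, x1, y1)


def coverage(text_box: Box, cell_box: Box) -> float:
    """Fraction of text_box's area that lies inside cell_box."""
    w = max(0.0, min(text_box[2], cell_box[2]) - max(text_box[0], cell_box[0]))
    h = max(0.0, min(text_box[3], cell_box[3]) - max(text_box[1], cell_box[1]))
    area = (text_box[2] - text_box[0]) * (text_box[3] - text_box[1])
    return w * h / area if area > 0 else 0.0


def match_ocr_to_cells(cell_boxes: list[Box], ocr_boxes: list[Box],
                       threshold: float = 0.5) -> list[Optional[int]]:
    """Assign each OCR box to its best-covering cell, or None if no cell covers enough of it."""
    assignments: list[Optional[int]] = []
    for text_box in ocr_boxes:
        scores = [coverage(text_box, cell) for cell in cell_boxes]
        if not scores:  # no predicted cells at all
            assignments.append(None)
            continue
        best = max(range(len(scores)), key=scores.__getitem__)
        assignments.append(best if scores[best] >= threshold else None)
    return assignments


ocr = [(1.0, 2.0, 9.0, 8.0)]  # one word, inside the first column
accurate = [(0.0, 0.0, 10.0, 10.0), (10.0, 0.0, 20.0, 10.0)]
shifted = [(6.0, 0.0, 16.0, 10.0), (16.0, 0.0, 26.0, 10.0)]  # same cells, predicted 6 units too far right
print(match_ocr_to_cells(accurate, ocr))  # [0]
print(match_ocr_to_cells(shifted, ocr))   # [None]
```

In this toy case, a small localization error in the predicted boxes is enough to drop the word from the table, which is why box-matching pipelines rely on extra correction steps.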

TFLOP solves this by introducing the following mechanisms:

1. Layout pointer mechanism

- Instead of relying on bounding-box detection, TFLOP directly maps table elements to OCR text regions using a pointer-based approach.

- This method eliminates alignment errors and removes the need for extra post-processing, making table extraction much more reliable.

2. Span-aware contrastive supervision

- Tables often contain complex structures, where cells can span multiple rows or columns.

- TFLOP improves accuracy by using span-aware contrastive supervision, a machine learning technique that adjusts model supervision based on the size and position of each table cell.

- This helps the model understand each cell in context and capture the relationships between data points more precisely.
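The layout pointer idea in item 1 can be illustrated with a toy Python sketch. Assuming the model has already produced one embedding per cell tag in the predicted HTML and one per OCR text region, each region "points" to the cell tag whose embedding scores highest under a dot product followed by a softmax. The 2-D embeddings, the `point_regions_to_cells` helper, and the dot-product scoring are illustrative assumptions; TFLOP learns its representations, and its exact formulation may differ.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def point_regions_to_cells(region_embs, cell_embs, temperature=1.0):
    """For each OCR text region, return (index of the cell tag it points to, pointer distribution)."""
    results = []
    for region in region_embs:
        probs = softmax([dot(region, cell) / temperature for cell in cell_embs])
        best = max(range(len(probs)), key=probs.__getitem__)
        results.append((best, probs))
    return results


# Two cell tags (e.g. two <td> tokens) and three OCR text regions.
cell_embs = [[1.0, 0.0], [0.0, 1.0]]
region_embs = [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]]
for best, probs in point_regions_to_cells(region_embs, cell_embs):
    print(best, [round(p, 3) for p in probs])
```

Because every region is mapped to an existing cell by index, the OCR text is carried over as-is instead of being re-located through predicted boxes. Several regions may point to the same cell (for example, a multi-line cell), and a cell that no region points to simply stays empty.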

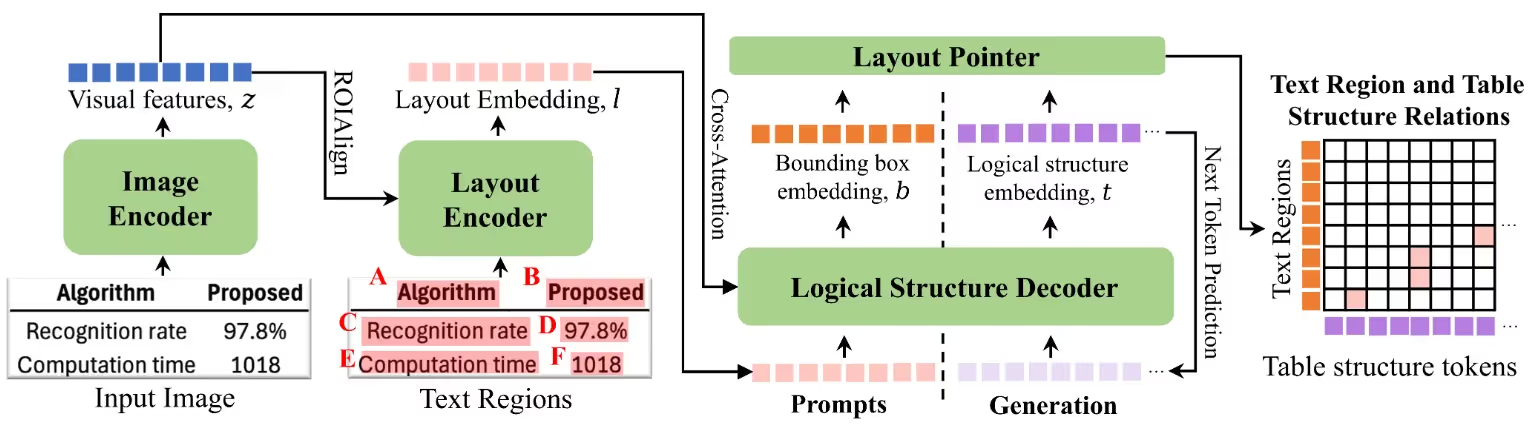
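Span-aware contrastive supervision from item 2 can be sketched as a contrastive loss with soft targets. In this hypothetical version, two cells count as positives in proportion to how much their row spans overlap (Jaccard overlap), so a cell spanning two rows shares its positive weight across both rows instead of being treated like a single-row cell. The overlap weighting, the temperature, and the helper names are assumptions made for this sketch, not the formulation used in TFLOP; a column-wise variant would use column spans the same way.

```python
import math


def span_overlap(a: range, b: range) -> float:
    """Jaccard overlap between the row indices covered by two cells."""
    sa, sb = set(a), set(b)
    return len(sa & sb) / len(sa | sb)


def span_aware_targets(spans: list[range]) -> list[list[float]]:
    """Soft positive weights for each anchor cell, normalized to sum to 1 (self excluded)."""
    n = len(spans)
    targets = []
    for i in range(n):
        w = [0.0 if i == j else span_overlap(spans[i], spans[j]) for j in range(n)]
        total = sum(w)
        targets.append([x / total for x in w] if total > 0 else w)
    return targets


def soft_contrastive_loss(sim, targets, temperature=0.1):
    """Mean cross-entropy between span-aware targets and a softmax over similarities to other cells."""
    n = len(sim)
    loss, anchors = 0.0, 0
    for i in range(n):
        if sum(targets[i]) == 0:  # anchor with no positives contributes nothing
            continue
        others = [j for j in range(n) if j != i]
        logits = [sim[i][j] / temperature for j in others]
        m = max(logits)
        log_z = m + math.log(sum(math.exp(x - m) for x in logits))
        loss -= sum(targets[i][j] * (sim[i][j] / temperature - log_z) for j in others)
        anchors += 1
    return loss / max(anchors, 1)


# Row spans: cell 0 covers rows 0-1, cell 1 is in row 0, cell 2 in row 1, cell 3 in row 2.
spans = [range(0, 2), range(0, 1), range(1, 2), range(2, 3)]
targets = span_aware_targets(spans)
print(targets[0])  # [0.0, 0.5, 0.5, 0.0]

# Toy similarity matrices: positives close together (good) versus far apart (bad).
good = [[1.0 if t > 0 else 0.0 for t in row] for row in targets]
bad = [[1.0 - s for s in row] for row in good]
print(soft_contrastive_loss(good, targets) < soft_contrastive_loss(bad, targets))  # True
```

The two-row cell 0 splits its target weight evenly between the single-row cells it overlaps, which is one simple way to let the size and position of a cell shape its supervision signal.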

How TFLOP stacks up against other methods

Compared to previous methods, TFLOP achieves the highest accuracy on major TSR benchmarks, including PubTabNet, FinTabNet, and SynthTabNet, demonstrating its superior ability to process real-world table structures.

- TFLOPBase introduces the layout pointer mechanism, already outperforming existing models.

- TFLOPFull adds span-aware contrastive supervision and image ROI alignment, pushing the accuracy even further.

The result? More accurate and reliable table structure recognition, ready for applications across industries, from finance and insurance to academic research and enterprise automation.

Find out more

Want to dive deeper into TFLOP’s research and implementation? Check out:

You can also experience TFLOP in action through Upstage’s Document Parse, our enterprise-grade document processing model:

Thank you for reading! We hope you find TFLOP useful in your own projects and look forward to your feedback.
