Top 5 Open-Source LLMs to watch out for in 2024

2024/01/12 | Written By: Sungmin Park (Content Manager)

Benefits of Using Open-Source LLMs

The AI market is changing as growing interest in generative AI drives a wave of open-source efforts. More than 10 large language models (LLMs) are scheduled for release this year. Open-source LLMs have drawn attention for their accessibility, transparency, and cost-effectiveness. Because they are transparent, enterprises can customize them to fit their specific needs. By fine-tuning an existing open-source model, teams can quickly build new models without training on massive amounts of data or developing their own systems from scratch. This has led to a growing chorus of voices in the industry calling for a healthy open-source ecosystem to advance AI technology. Let's explore the benefits of using open-source LLMs below.

Innovation and Development

Collaborative Contributions: Encourages technological progress through community collaboration.

Rapid Iterations: Community-driven enhancements and bug fixes lead to swift improvements.

Accessibility and Customization

Cost-Free Access: Eliminates financial barriers to technology adoption.

Flexibility for Customization: Offers the ability to modify and extend the software to meet diverse needs.

Educational and Research Opportunities

Learning Platform: Provides resources for students and researchers to learn and experiment.

Transparency: Facilitates understanding and research of algorithms and data processing methods.

Economic Benefits

Cost Reduction: Decreases development costs and potentially increases Return on Investment (ROI).

Entrepreneurship and Innovation: Enables startups and businesses to develop new products and services at a lower cost.

Technical Reliability

Enhanced Security: Publicly available source code can lead to stronger security and increased trust.

Sustainable Support: Offers the potential for long-term support and maintenance by the open-source community.

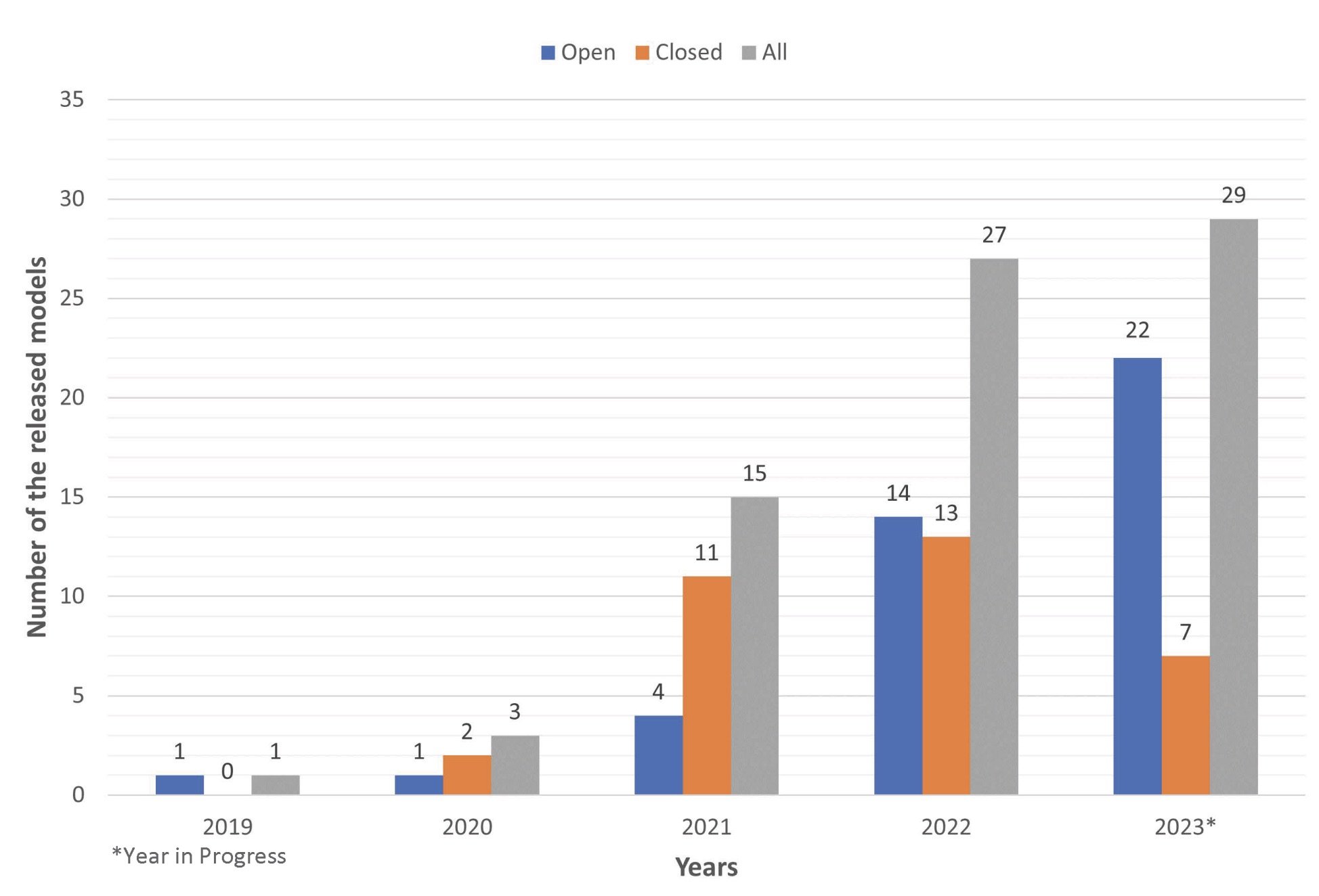

Trends in the number of LLMs released over the years. (Source: A Comprehensive Overview of Large Language Models)

The open-source LLM craze began in February 2023, when Meta made LLaMA available to academia, and since then a number of sLLMs (small large language models) have been built on top of it. sLLMs typically have between 6 billion (6B) and 10 billion (10B) parameters. Their main advantage is efficiency: they can deliver strong results at low cost with a far smaller size, considering that OpenAI's GPT-4 is estimated to have around 1.7 trillion parameters.
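The size gap translates directly into hardware cost. As a rough sketch, the memory needed just to hold a model's weights is the parameter count times the bytes per parameter. The precision table below is an assumption about common formats, activations, KV cache, and optimizer state are ignored, and the 1.7 trillion figure for GPT-4 is the unofficial estimate quoted above, not a published number.

```python
# Rough weight-only memory estimate: parameters x bytes per parameter.
# Assumption for illustration: ignores activations, KV cache, and optimizer state.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same 2 bytes
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory in decimal gigabytes (1 GB = 1e9 bytes) for the weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    models = [("7B sLLM", 7e9), ("10B sLLM", 10e9), ("GPT-4 (unofficial estimate)", 1.7e12)]
    for name, params in models:
        fp16 = weight_memory_gb(params, "fp16")
        int4 = weight_memory_gb(params, "int4")
        print(f"{name:28s} fp16: {fp16:8.1f} GB   int4: {int4:8.1f} GB")
```

At 16-bit precision a 7B model needs about 14 GB for its weights, which fits on a single 24 GB GPU, while 1.7 trillion parameters would need roughly 3,400 GB.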

So what are the 5 best open source LLMs to watch out for in 2024?

5 Best Open-Source LLMs for 2024

1. Llama 2

Source: Meta AI

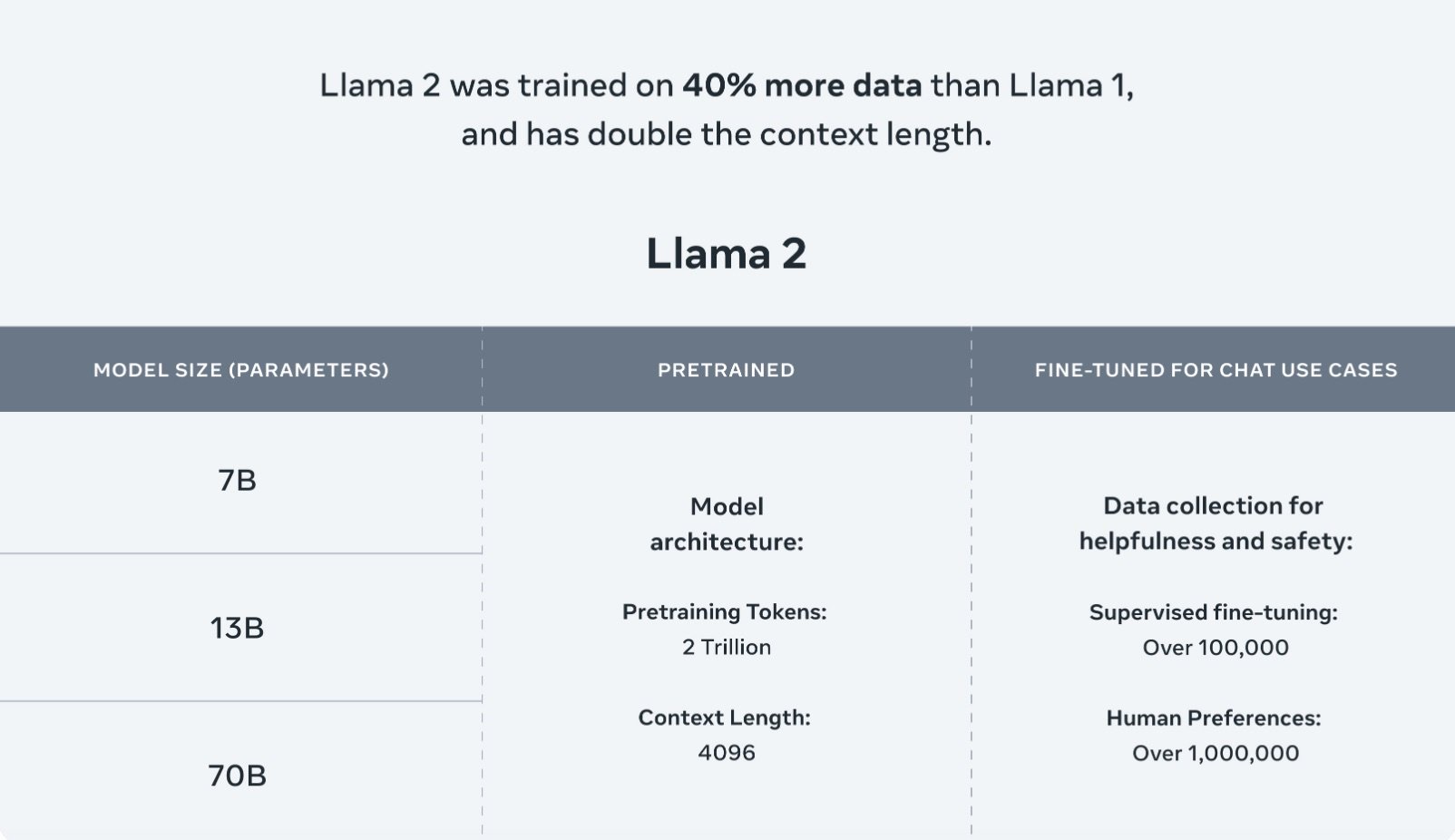

Llama 2, developed by Meta AI, is one of the most popular open-source LLMs. Released on July 18, 2023, it is the first commercially available version of Llama. It comes in four sizes ranging from 7B to 70B parameters, and its pre-training data consists of 2 trillion tokens, more than Llama 1.
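To see where size labels like "7B" come from, here is a sketch that counts the parameters of a Llama-style decoder from its configuration. The hyperparameters below (vocabulary size, hidden size, feed-forward width, layer count, attention and key/value heads) are the publicly released Llama 2 7B and 70B configuration values as we understand them. The formula assumes untied input and output embeddings, a gated feed-forward block with three projections, bias-free linear layers, RMSNorm gains as the only normalization parameters, and grouped-query attention (fewer key/value heads) in the 70B model. `LlamaStyleConfig` and `count_params` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class LlamaStyleConfig:
    vocab_size: int
    hidden_size: int
    intermediate_size: int
    num_layers: int
    num_heads: int
    num_kv_heads: int  # smaller than num_heads when grouped-query attention is used


def count_params(cfg: LlamaStyleConfig) -> int:
    d = cfg.hidden_size
    head_dim = d // cfg.num_heads
    kv_dim = cfg.num_kv_heads * head_dim
    # Attention: query and output projections are d x d; key and value are d x kv_dim.
    attention = 2 * d * d + 2 * d * kv_dim
    # Gated feed-forward block: gate, up, and down projections.
    mlp = 3 * d * cfg.intermediate_size
    # Two RMSNorm gain vectors per layer (before attention and before the MLP).
    norms = 2 * d
    per_layer = attention + mlp + norms
    embeddings = cfg.vocab_size * d  # input token embeddings
    lm_head = cfg.vocab_size * d     # untied output projection
    final_norm = d
    return cfg.num_layers * per_layer + embeddings + lm_head + final_norm


llama2_7b = LlamaStyleConfig(32000, 4096, 11008, 32, 32, 32)
llama2_70b = LlamaStyleConfig(32000, 8192, 28672, 80, 64, 8)

if __name__ == "__main__":
    print(f"7B:  {count_params(llama2_7b):,}")
    print(f"70B: {count_params(llama2_70b):,}")
```

Under these assumptions the 7B configuration comes out to about 6.74 billion parameters and the 70B configuration to about 69 billion, which is how they earn their rounded names.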

Llama 2 uses a standard transformer architecture with modifications such as RMSNorm (root mean square layer normalization) and RoPE (rotary positional embedding). Llama-2-Chat starts with supervised fine-tuning and is then refined with RLHF (reinforcement learning from human feedback). Llama 2 uses the same tokenizer as Llama 1, based on the byte pair encoding (BPE) algorithm implemented with SentencePiece. Meta also paid significant attention to safety, addressing the critical issues of truthfulness, toxicity, and bias. Because it can be fine-tuned on a variety of platforms, including Azure and Windows, it is useful for a wide range of projects.
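Both components named above are small enough to sketch directly. The following is a plain-Python illustration of the published formulas, not Meta's code: RMSNorm rescales a vector by its root mean square and a learned gain (with no mean subtraction), and RoPE rotates each pair of dimensions by an angle proportional to the token's position. The interleaved pairing of dimensions and the base of 10000 are assumptions; implementations differ in how they pair dimensions.

```python
import math
from typing import List


def rms_norm(x: List[float], gain: List[float], eps: float = 1e-6) -> List[float]:
    """RMSNorm: divide by the root mean square of x, then apply a learned per-dimension gain."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * g for v, g in zip(x, gain)]


def rope(x: List[float], position: int, base: float = 10000.0) -> List[float]:
    """RoPE: rotate each pair (x[2i], x[2i+1]) by the angle position * base**(-2i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even dimension")
    out = [0.0] * d
    for i in range(d // 2):
        theta = position * base ** (-2 * i / d)
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s
        out[2 * i + 1] = a * s + b * c
    return out


def dot(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


if __name__ == "__main__":
    q = [1.0, 0.5, -0.3, 2.0]
    k = [0.2, -1.0, 0.7, 0.4]
    # Same relative offset (2) at different absolute positions gives the same score.
    print(dot(rope(q, 3), rope(k, 1)), dot(rope(q, 7), rope(k, 5)))
    print(rms_norm([3.0, 4.0], [1.0, 1.0]))
```

The two scores printed first match up to floating-point rounding: because each pair is rotated, a query-key dot product depends only on how far apart the two tokens are, which is how RoPE encodes relative position.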

Llama-2-Chat is optimized for two-way conversation using reinforcement learning from human feedback (RLHF) and reward modeling. (Source: Meta AI)
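The reward model in this pipeline is trained on pairs of responses in which a human preferred one over the other. The Llama 2 paper describes a binary ranking loss of the form −log σ(r(x, y_chosen) − r(x, y_rejected) − m), where m is an optional margin. The sketch below evaluates that loss for scalar reward scores; `ranking_loss` is a hypothetical helper, and the scores are arbitrary illustrative numbers, not outputs of a real reward model.

```python
import math


def ranking_loss(r_chosen: float, r_rejected: float, margin: float = 0.0) -> float:
    """Binary ranking loss -log(sigmoid(r_chosen - r_rejected - margin)).

    Computed as softplus(-z) = max(-z, 0) + log1p(exp(-|z|)) so large |z| cannot overflow.
    """
    z = r_chosen - r_rejected - margin
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


if __name__ == "__main__":
    print(ranking_loss(2.0, -1.0))              # preferred answer scored higher: small loss
    print(ranking_loss(-1.0, 2.0))              # preferred answer scored lower: large loss
    print(ranking_loss(2.0, -1.0, margin=1.0))  # a margin demands a wider gap between scores
```

Minimizing this loss pushes the reward model to score preferred responses higher; the trained reward model then guides the RLHF stage.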

2. Mistral

Mistral 7B is the foundation model released by Mistral AI, built with its own customized training, tuning, and data processing methods. It is an open-source model available under the Apache 2.0 License, designed for real-world applications with both efficiency and high performance. At release, Mistral 7B outperformed Llama 2 13B, one of the best open-source 13B models, on all evaluated benchmarks, including math, code generation, and reasoning.

Performance of Mistral 7B and different Llama models on a wide range of benchmarks. (Source: Mistral 7B)

For large batch-processing tasks that do not involve complex calculations, Mistral-tiny, the endpoint that serves Mistral 7B, is the right choice; it is the most cost-effective endpoint for applications. Mistral-small (Mixtral 8x7B Instruct v0.1) supports five languages (English, French, Italian, German, and Spanish) and excels at code generation. Mistral-medium serves a high-performance prototype model that outperforms GPT-3.5 and is suited to high-quality applications.

3. Solar

Solar is a pre-trained LLM released by Upstage. SOLAR 10.7B, an LLM with 10.7 billion parameters, outperforms existing models such as Llama 2 and Mistral 7B on essential NLP tasks while maintaining computational efficiency. In December 2023, it reached the top position on the Open LLM Leaderboard run by Hugging Face, the world's largest machine learning platform. This accomplishment is significant because it made Solar recognized as the world's best-performing model with fewer than 30 billion (30B) parameters, the standard for a small LLM (SLM).

To maximize the performance of the relatively small Solar model, Upstage used its Depth Up-Scaling method, which aims to combine the strong performance of larger 13B models with the efficiency of smaller 7B models, whose capabilities are more limited. Unlike mixture-of-experts (MoE) approaches, Depth Up-Scaling does not require complex changes to training or inference. Solar's base model is a 32-layer Llama 2 architecture initialized with pre-trained weights from Mistral 7B. This lets Solar tap into the vast pool of community resources built around these models while introducing novel modifications to enhance its capabilities.
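The layer surgery behind Depth Up-Scaling can be sketched in a few lines. Following the description in the SOLAR 10.7B paper, the 32-layer base model is duplicated, the last 8 layers are dropped from one copy and the first 8 from the other, and the two are stacked into a 48-layer model that is then further pre-trained. Layers are represented here by string labels, `depth_up_scale` is a hypothetical helper, and the continued pre-training step is not shown.

```python
import copy
from typing import List, Sequence, TypeVar

Layer = TypeVar("Layer")


def depth_up_scale(layers: Sequence[Layer], m: int) -> List[Layer]:
    """Stack two copies of a layer stack, dropping the last m layers of the first
    copy and the first m layers of the second, for 2 * (n - m) layers in total."""
    n = len(layers)
    if not 0 < m < n:
        raise ValueError("m must be between 1 and n - 1")
    bottom = list(layers[: n - m])
    # Deep-copy the second half so the duplicated layers can be trained independently.
    top = [copy.deepcopy(layer) for layer in layers[m:]]
    return bottom + top


if __name__ == "__main__":
    base = [f"layer_{i}" for i in range(32)]  # stand-in for a 32-layer base model
    scaled = depth_up_scale(base, m=8)
    print(len(scaled))    # 48
    print(scaled[22:26])  # seam: layer_22, layer_23, then layer_8, layer_9
```

Dropping m layers from each copy, instead of stacking two full copies, is meant to reduce the mismatch at the seam where the copies meet; continued pre-training then recovers the performance lost in the surgery. No routing or new modules are introduced, which is why training and inference code need no changes.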

Upstage also relied on data it built itself, rather than on leaderboard benchmark datasets, during the pre-training and fine-tuning stages. This underscores Solar's versatility for real-world business tasks, unlike models that boost their leaderboard scores by training directly on benchmark sets.
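One simple way to check that training data does not overlap a benchmark is to look for word n-grams shared between the two. The sketch below is a generic illustration of that idea, not Upstage's actual procedure; the n-gram length of 8, lowercasing, and whitespace tokenization are arbitrary assumptions, and `ngrams` and `contaminated` are hypothetical helpers.

```python
from typing import Iterable, Set, Tuple


def ngrams(text: str, n: int = 8) -> Set[Tuple[str, ...]]:
    """All lowercase word n-grams in text (empty if the text has fewer than n words)."""
    words = text.lower().split()
    return {tuple(words[i : i + n]) for i in range(len(words) - n + 1)}


def contaminated(train_docs: Iterable[str], benchmark_item: str, n: int = 8) -> bool:
    """True if any training document shares at least one n-gram with the benchmark item."""
    target = ngrams(benchmark_item, n)
    return any(target & ngrams(doc, n) for doc in train_docs)


if __name__ == "__main__":
    item = "the quick brown fox jumps over the lazy dog near the river bank"
    clean = ["a recipe for cooking pasta with fresh tomatoes and basil tonight"]
    leaked = ["yesterday the quick brown fox jumps over the lazy dog near here"]
    print(contaminated(clean, item), contaminated(leaked, item))
```

Real decontamination pipelines typically add text normalization and scale this up with hashing, but the principle is the same: benchmark text should not appear in the training data.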

Evaluation results for SOLAR 10.7B and SOLAR 10.7B-Instruct along with other top-performing models. (Source: SOLAR 10.7B: Scaling Large Language Models with Simple yet Effective Depth Up-Scaling)

4. Yi

Yi-34B was developed by the Chinese startup 01.AI, which reached unicorn status, a valuation of more than $1 billion, in less than eight months. The Yi series is designed as a bilingual (English and Chinese) language model family trained on a high-quality multilingual corpus of 3 trillion tokens, and it has shown promise in language comprehension, commonsense reasoning, reading comprehension, and more. Yi models come in 6B and 34B sizes, and their context window can be extended to 32K tokens at inference time.

According to Yi's GitHub repository, the 6B models are suitable for personal and academic use, while the 34B models are suitable for personal, academic, and commercial use. Yi models use the same architecture as LLaMA, allowing users to take advantage of the LLaMA ecosystem. 01.AI plans to release an improved model this year and to expand its commercial products to compete in the leading generative AI market.

5. Falcon

Source: Technology Innovation Institute

Falcon is a family of generative large language models released by the Technology Innovation Institute (TII) in the United Arab Emirates (UAE). It offers models with 180B, 40B, 7.5B, and 1.3B parameters. Falcon 40B is available royalty-free to both researchers and commercial users, works well in 11 languages, and can be fine-tuned for specific needs. It was trained with less compute than GPT-3 and Chinchilla, with a focus on high-quality training data. The largest model, Falcon 180B, has 180 billion parameters, was trained on 3.5 trillion tokens, and delivers outstanding performance.
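Training compute for dense transformers is commonly approximated as about 6 floating-point operations per parameter per training token, C ≈ 6ND (roughly 2 for the forward pass and 4 for the backward pass). Applying that rule of thumb to the Falcon 180B figures above gives an order-of-magnitude estimate; it is an approximation, not TII's reported compute, and `training_flops` is a hypothetical helper.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute C ~= 6 * N * D for a dense transformer."""
    return 6.0 * num_params * num_tokens


if __name__ == "__main__":
    c = training_flops(180e9, 3.5e12)  # Falcon 180B: 180B parameters, 3.5T tokens
    print(f"{c:.2e} FLOPs")            # about 3.78e+24
```

The same formula makes the trade-off behind "less compute" concrete: for a fixed budget, compute scales with the product of model size and training tokens, so the choice of how to split that budget, and the quality of the tokens spent, matters.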

Accelerating the Future of AI with Open Source LLMs

In conclusion, open-source LLMs have significant room to grow, and that growth can stimulate the broader AI market. LLMs are interconnected with many areas of AI, enabling new ideas and technologies, and the open-source LLM ecosystem will in turn accelerate their growth. Open source provides an ecosystem that allows anyone to participate, improve, and learn, creating new opportunities for all. We should anticipate these changes, monitor their growth, and strive to grow alongside them.