What makes an LLM enterprise-ready? By building with enterprises—not just building for them—we identified their critical needs: (1) the ability to handle complex documents, (2) superior language and domain expertise that outpaces other providers, and (3) guaranteed cost-efficiency to deliver high-impact results within budget constraints.

Today, we’re thrilled to introduce Solar Pro — the official release and a significant leap forward from Solar Pro Preview. Here’s why leading organizations are already paying attention:

- Best-in-class structured text understanding: Solar Pro outshines competitors in understanding structured text, such as HTML or Markdown.

- East Asian language mastery: Solar Pro improves on Solar Pro Preview by up to 64% on Korean and Japanese benchmarks while maintaining consistently strong performance in English.

- Built for high-stakes industries: Across the finance, healthcare, and legal domains, Solar Pro achieves the highest performance among models that run on a single GPU.

- Ready for high-complexity use cases: Solar Pro now supports structured outputs, enabling seamless integration with enterprise systems. With up to 32k tokens of context, it also handles large-scale data and complex tasks effortlessly.

Excels in structured text understanding to handle complex enterprise data

Enterprise data comes in various forms, including complex documents, tabular data, JSON, and graphical data. Many of these, particularly documents, can be transformed into markup formats like HTML or Markdown using tools such as Document Parse. This makes processing and understanding structured text a critical skill for LLMs—a capability we call structured text understanding.

To demonstrate Solar Pro's expertise in this area, especially its ability to understand HTML and Markdown, we evaluated its performance using benchmarks that represent common enterprise documents:

- Document QA: DocVQA-html and MP-DocVQA-html, where original documents from DocVQA and MP-DocVQA are converted to HTML using Document Parse

- Table QA: WikiTableQuestions

Experimental results show that Solar Pro holds a significant performance advantage over other leading models across four benchmarks. In the text-based LLM setting described above, it outperformed every compared model on the DocVQA-html and MP-DocVQA-html benchmarks. That includes not only models that run on a single GPU but also larger models such as LLaMA 70B, which requires a multi-GPU setup, and GPT-4o mini. The same trend held on the Table QA task with structured text inputs in HTML and Markdown formats. These results demonstrate Solar Pro’s strong capability in handling the structured text formats commonly used in enterprise applications.
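As a rough illustration of what "structured text inputs in HTML and Markdown formats" can look like for a Table QA task, the sketch below serializes one small table both ways and wraps it in a question prompt. The function names, the sample table, and the prompt wording are all hypothetical; this is not the setup used in the evaluation above.

```python
from html import escape


def table_to_markdown(header, rows):
    """Render a table as a pipe-delimited Markdown table."""
    def fmt(cells):
        # Escape pipes so cell text cannot break the column layout.
        return "| " + " | ".join(str(c).replace("|", "\\|") for c in cells) + " |"

    lines = [fmt(header), "| " + " | ".join("---" for _ in header) + " |"]
    lines.extend(fmt(row) for row in rows)
    return "\n".join(lines)


def table_to_html(header, rows):
    """Render the same table as a minimal HTML <table>."""
    head = "".join(f"<th>{escape(str(c))}</th>" for c in header)
    body = "".join(
        "<tr>" + "".join(f"<td>{escape(str(c))}</td>" for c in row) + "</tr>"
        for row in rows
    )
    return f"<table><tr>{head}</tr>{body}</table>"


def build_prompt(table_text, question):
    """Hypothetical prompt template: table first, question last."""
    return (
        "Answer the question using the table.\n\n"
        f"{table_text}\n\nQuestion: {question}"
    )


# Illustrative data only.
header = ["Year", "Host city"]
rows = [[2012, "London"], [2016, "Rio de Janeiro"]]

md = table_to_markdown(header, rows)
html_table = table_to_html(header, rows)

print(build_prompt(md, "Which city hosted in 2016?"))
print(build_prompt(html_table, "Which city hosted in 2016?"))
```

Sending the same question with each serialization is one simple way to compare how a model handles the two formats.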

Multilingual understanding and instruction following

Besides excelling in specific use cases, Solar Pro offers exceptional proficiency in enterprise customers’ preferred languages. While English-speaking customers were already impressed with the performance of Solar Mini and Solar Pro Preview, many users in Korea and Japan asked for stronger capabilities in their languages. We listened, and Solar Pro delivers those capabilities.

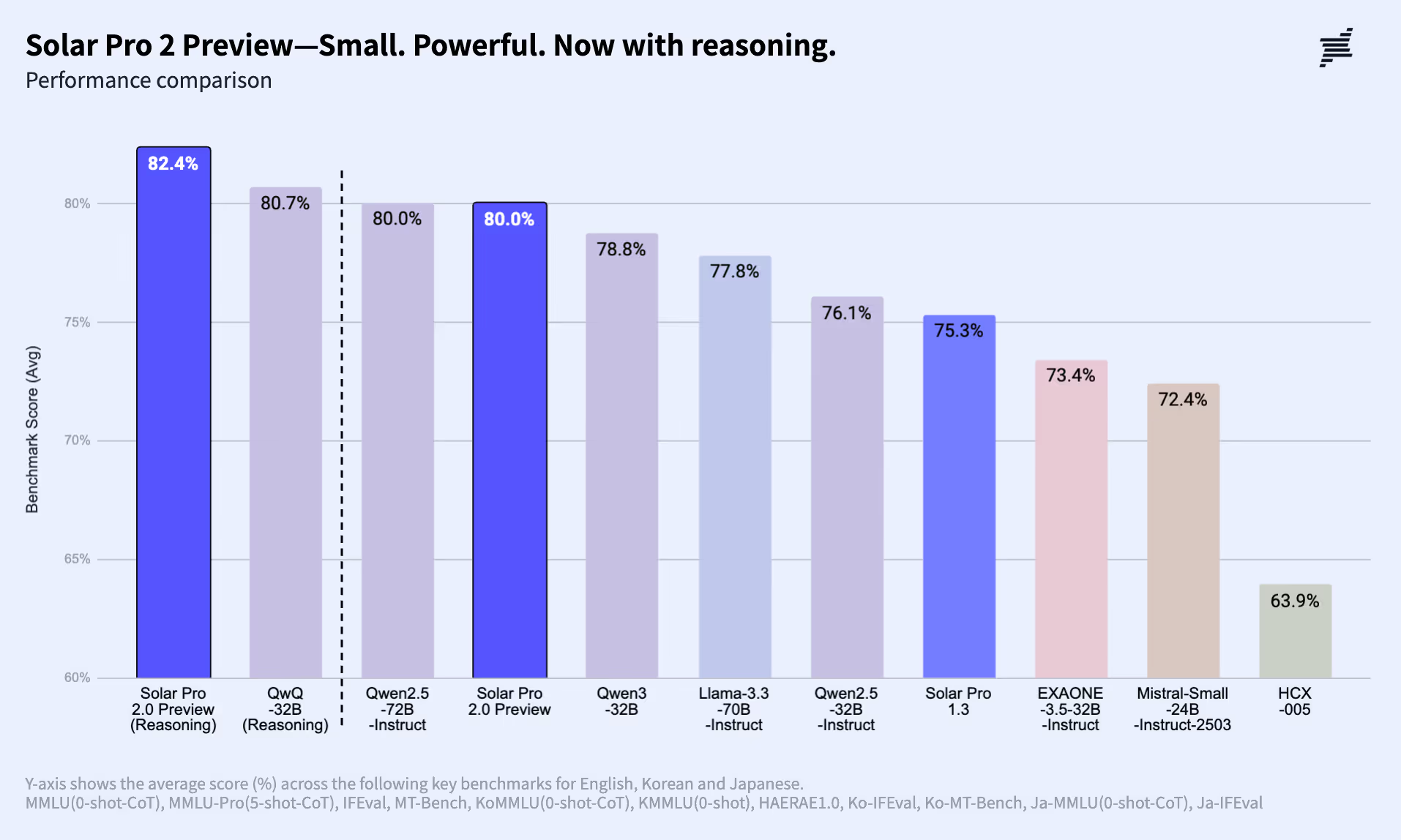

Solar Pro provides a substantial leap in performance while maintaining the best performance among single-GPU models. Key highlights include:

- On the IFEval benchmark, a trusted measure of instruction-following capabilities, Solar Pro outperformed all the models designed for single GPUs across all three target languages.

- For Korean, Solar Pro demonstrates exceptional performance on MMLU, KMMLU, HAERAE, and Ko-IFEval (a translated version of IFEval). Across these four benchmarks, it leads competing models by margins ranging from at least 11% to as much as 98%. These results establish Solar Pro as a clear leader in Korean language proficiency.

Strong domain intelligence for finance, healthcare, and legal

Solar Pro demonstrates exceptional capabilities in three critical domains: finance, healthcare, and legal. For domain-specific evaluations, we utilized comprehensive metrics tailored to real-world applications:

- MMLUs: Combines domain-specific fields from MMLU and MMLU-Pro to assess English-language performance.

- Ko-MMLUs: Aggregates relevant fields from MMLU and KMMLU for a robust evaluation of Korean-language performance.

- Domain-specific benchmarks: Includes specialized metrics like KorMedMCQA, KBL, and Ko-Finance-Bench, an internal benchmark developed by Upstage along with an enterprise customer.

As the experimental results show, Solar Pro achieves top performance in both English and Korean across the three key domains, surpassing other single-GPU-compatible models. This domain-specific expertise in both languages makes it a versatile solution for enterprise clients across diverse industries.

Ready for high-complexity use cases

In addition, Solar Pro is enhanced to address enterprise challenges more effectively with two key features:

- 32k token context length: The expanded 32k token context length allows Solar Pro to process and analyze larger contexts in a single pass. This capability is ideal for complex tasks, such as reviewing lengthy contracts, analyzing multi-page reports, or implementing RAG pipelines.

- Structured outputs: Solar Pro can generate machine-readable formats like JSON while strictly adhering to user-defined schemas. This ensures seamless integration with enterprise workflows and eliminates the need for manual corrections. In internal benchmarks, Solar Pro achieved an impeccable 100% schema compliance rate, a significant leap from the 84.76% compliance of Solar Mini.
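To make the schema compliance figure above concrete, here is a minimal sketch of how such a rate could be computed over a batch of model outputs. It is not Upstage's internal benchmark: the validator covers only a small subset of JSON Schema (`type`, `required`, `properties`, `items`, `additionalProperties: false`), and the schema and sample outputs are invented for illustration. A production pipeline would more likely rely on a full JSON Schema validator.

```python
import json

# JSON Schema type names supported by this sketch, mapped to Python types.
_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}


def conforms(value, schema):
    """Check a parsed JSON value against a small subset of JSON Schema."""
    expected = schema.get("type")
    if expected is not None:
        # bool is a subclass of int in Python, so reject it for numeric types.
        if expected in ("integer", "number") and isinstance(value, bool):
            return False
        if not isinstance(value, _TYPES[expected]):
            return False
    if isinstance(value, dict):
        if any(key not in value for key in schema.get("required", [])):
            return False
        props = schema.get("properties", {})
        if schema.get("additionalProperties") is False:
            if any(key not in props for key in value):
                return False
        return all(conforms(value[k], sub) for k, sub in props.items() if k in value)
    if isinstance(value, list) and "items" in schema:
        return all(conforms(item, schema["items"]) for item in value)
    return True


def compliance_rate(outputs, schema):
    """Percentage of raw output strings that parse as JSON and fit the schema."""
    if not outputs:
        return 0.0
    ok = 0
    for text in outputs:
        try:
            parsed = json.loads(text)
        except json.JSONDecodeError:
            continue  # unparseable output counts as non-compliant
        ok += conforms(parsed, schema)
    return 100.0 * ok / len(outputs)


# Hypothetical schema and outputs, for illustration only.
schema = {
    "type": "object",
    "properties": {"invoice_id": {"type": "string"}, "total": {"type": "number"}},
    "required": ["invoice_id", "total"],
    "additionalProperties": False,
}
outputs = [
    '{"invoice_id": "A-1", "total": 12.5}',
    '{"invoice_id": "A-2"}',
    "not json",
    '{"invoice_id": "A-3", "total": 7}',
]
rate = compliance_rate(outputs, schema)
print(f"{rate:.2f}% schema compliant")
```

Counting unparseable outputs as failures keeps the metric strict: an output only passes if downstream code could consume it without manual correction.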

Getting started with Solar Pro

Integrating Solar Pro into your operations is straightforward, with multiple deployment options to suit your needs:

- VPC deployment with AWS: Deploy Solar Pro on your AWS infrastructure via the newly released AWS Bedrock Marketplace or AWS Marketplace. To save costs, consider using the quantized version of Solar Pro, which shows a performance degradation of less than 0.5%. The quantized Solar Pro requires two NVIDIA A10G GPUs, so an AWS ml.g5.12xlarge instance, which includes four A10G GPUs, can support two Solar Pro deployments simultaneously.

- API from Upstage Console: If setting up your own infrastructure isn't preferable, access Solar Pro through the Upstage Console. This platform provides a user-friendly interface to interact with Solar Pro's capabilities, offering a pay-as-you-go model for flexibility.

- On-Premises deployment: For organizations with specific security or compliance requirements, deploying Solar Pro on-premises is an option. Please contact our sales team to discuss what’s possible to meet your enterprise needs.
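The sizing note in the AWS option above comes down to simple integer division. The sketch below restates it; the function name is hypothetical, and the only figures used are the ones given in the text (two A10G GPUs per quantized deployment, four A10G GPUs on an ml.g5.12xlarge instance).

```python
# Quantized Solar Pro needs two NVIDIA A10G GPUs per deployment (from the text above).
GPUS_PER_DEPLOYMENT = 2


def deployments_per_instance(gpus_on_instance, gpus_per_deployment=GPUS_PER_DEPLOYMENT):
    """Number of whole deployments that fit on one instance."""
    return gpus_on_instance // gpus_per_deployment


# An ml.g5.12xlarge instance includes four A10G GPUs.
print(deployments_per_instance(4))
```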

Towards building the ideal LLM for the enterprise

Solar Pro marks a milestone in enterprise AI, offering exceptional capabilities in structured text understanding, multilingual proficiency, and domain expertise—all while maintaining the efficiency of a single GPU. From automating workflows to analyzing complex documents and delivering precision in high-stakes industries like finance, healthcare, and legal, Solar Pro is a trusted partner for enterprises embracing AI-driven transformation.

This is just the beginning. By continuing to listen to our customers and refine our solutions, we are committed to shaping the ideal LLM for enterprise needs. Explore what Solar Pro can do for your business—get started today and unlock new possibilities.

Want to learn more?

- Contact our sales team

- Quickly test Solar Pro in our Playground

- Add questions or make suggestions for Solar Pro
