We don’t have a technology problem

Everyone in insurance is talking about AI. Pilot programs. Proof of concepts. Partnerships. But beneath the noise sits an inconvenient truth: it’s not the technology that’s slowing the industry down.

It’s the lack of trust.

Let’s be honest: insurance has never suffered from a shortage of technology. There are tools that can parse PDFs, classify ACORDs, read schedules, and even predict loss trends with near-surgical accuracy.

Yet carriers, MGAs, and brokers hesitate to scale AI because they can’t see how those insights are made. The model gives an answer, but not the reasoning. The data flows through dozens of invisible layers, and by the time a quote appears, no one can say which field or file informed it.

That's an assumption, not intelligence.

And in an industry built on proof, assumption is the fastest way to lose trust.

Capability doesn’t equal confidence

Insurance is built on a paper trail. Every number, every note, every revision must tie back to something verifiable. That’s what keeps regulators, reinsurers, and policyholders confident that risk is being managed responsibly.

But many AI systems skip that step entirely. They promise efficiency and accuracy without offering explainability, which is a huge red flag for any underwriter who’s ever had to defend a decision in front of compliance or claims.

You can’t audit what you can’t see. You can’t explain what you don’t understand. And you can’t trust what you can’t trace.

Adoption lags not because of resistance to technology, but because of resistance to uncertainty.

Regulation is catching up

Trust isn’t just a good idea anymore. It’s the law.

In the last couple of years, two major regulatory frameworks have redrawn the lines for how insurers can use AI:

- The NAIC Model Bulletin on the Use of Artificial Intelligence Systems by Insurers (adopted December 2023) makes it clear: insurers must maintain accountability, transparency, and explainability for any AI system influencing decisions. That means documenting data sources, reviewing for bias, and proving that no algorithm is introducing unfair outcomes.

- The EU AI Act (2024) goes even further, classifying AI used for risk assessment and pricing in life and health insurance as “high-risk.” Insurers deploying these systems must maintain human oversight, auditable decision logs, and a transparent record of how every model behaves.

In simple terms, every automated action in underwriting, from data extraction to pricing recommendations, must now be explainable and defensible.

Regulators are saying what underwriters have known all along. When you can’t explain your decision, you lose credibility.

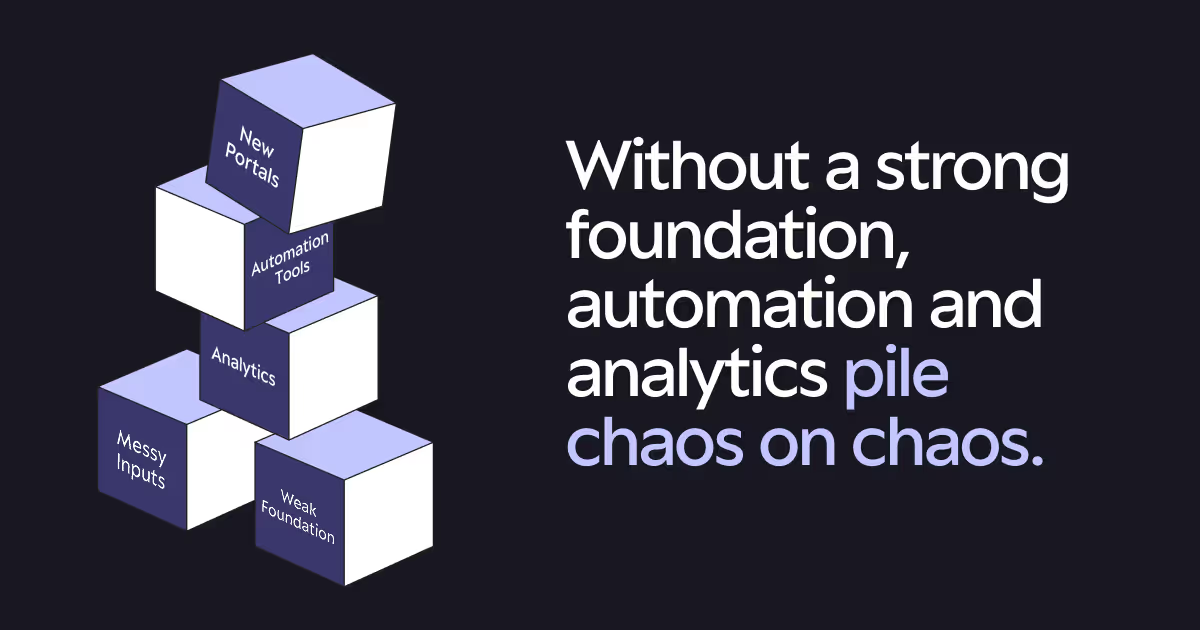

The danger of black-box AI

The market is full of “AI underwriting assistants” that claim to speed up submissions and streamline workflows. The problem is that when these systems act like black boxes, the output can’t be validated.

And that’s where real risk begins.

- A single incorrect class code can distort a portfolio’s entire loss ratio.

- A missing coverage detail can create exposure no one sees until a claim hits.

- A biased training dataset can quietly push entire segments of business out of appetite.

When AI can’t show its work, every decision becomes a liability. Black-box AI doesn’t just create errors. It creates doubt. And doubt spreads faster than innovation ever can.

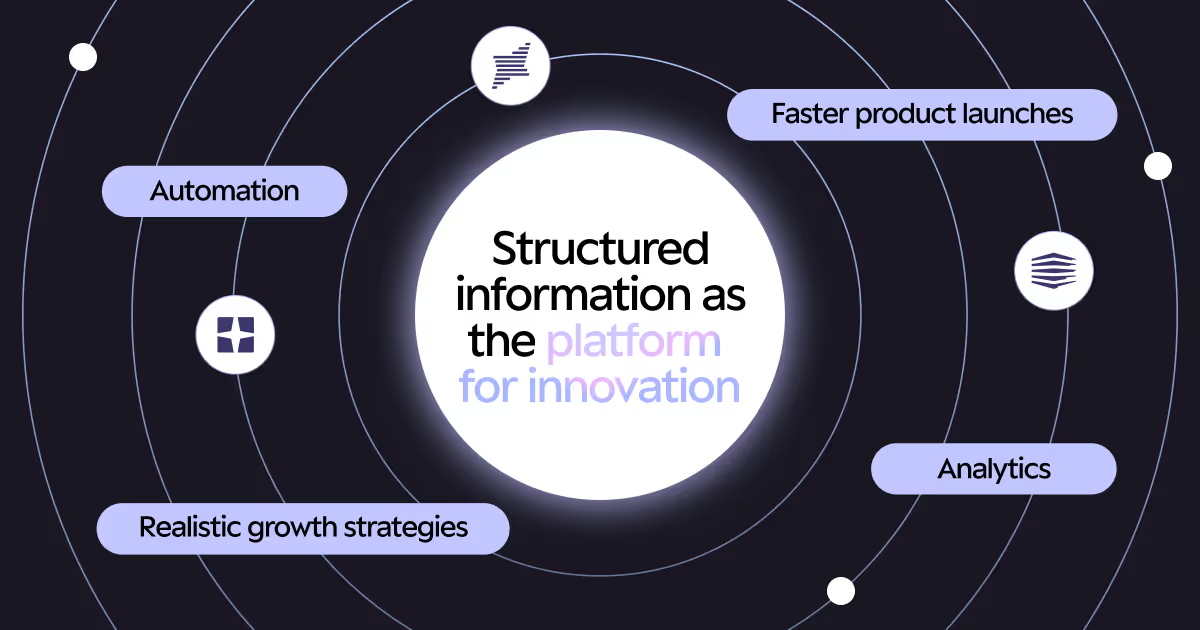

Trust starts at the data layer

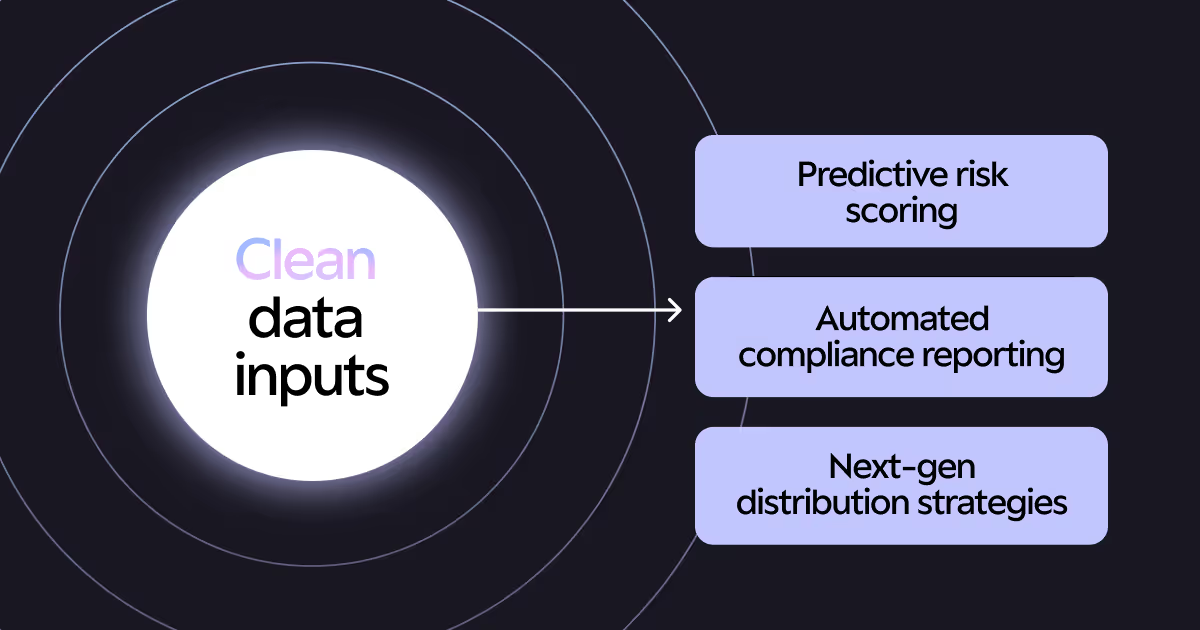

The only way to make AI trustworthy is to make its inputs reliable.

That starts at the submission layer: the messy, inconsistent, attachment-filled inbox where most underwriting workflows still begin.

If that foundation is weak, no model can fix it. You can’t build accuracy on top of chaos.

That’s where AI Space, Upstage’s proprietary AI platform developed over years of work with global insurers, changes the equation.

AI Space doesn’t just read and extract data. It builds trust into every step of the process:

- Traceability: Every data point is linked back to its source file and page.

- Transparency: Every extraction and classification can be reviewed and explained.

- Accountability: Every workflow is compliant with NAIC and EU AI Act standards.
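To make the traceability idea concrete, here is a minimal sketch of what linking each data point to its source file and page could look like. It is an illustration only, not AI Space's actual schema or API: the class names (`SourceRef`, `ExtractedField`), the helper `untraceable`, and the sample file names and values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SourceRef:
    """Where a value was read from (hypothetical schema)."""
    file_name: str
    page: int


@dataclass(frozen=True)
class ExtractedField:
    """One extracted data point plus its provenance."""
    name: str
    value: str
    source: Optional[SourceRef]  # None means the value cannot be traced


def untraceable(fields: List[ExtractedField]) -> List[str]:
    """Return the names of fields that have no source reference."""
    return [f.name for f in fields if f.source is None]


# Made-up sample submission, for illustration only.
submission = [
    ExtractedField("insured_name", "Acme Logistics LLC", SourceRef("acord_125.pdf", 1)),
    ExtractedField("class_code", "7219", SourceRef("loss_runs.pdf", 3)),
    ExtractedField("total_insured_value", "12500000", None),
]

# Flag anything an underwriter could not tie back to a document.
print(untraceable(submission))
```

In a setup like this, a reviewer can jump from any field straight to the file and page it came from, and any value without a source is flagged before it reaches a quote.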

The result is AI that doesn’t just work faster. It’s AI that works responsibly.

When you can see what your AI did, when it did it, and why, you stop worrying about whether to trust it. You just do.

Proof that trust pays off

Trust isn’t just philosophical. The difference between a competitive advantage and another abandoned experiment almost always comes down to whether people believe the system behind it.

Upstage clients report:

- 96% accuracy in manual data verification

- 40% faster submission turnaround

- 100% traceable audit trail across AI-assisted workflows

That’s not because they adopted AI earlier than everyone else. It’s because they adopted AI that they could understand and explain.

Underwriters stop second-guessing outputs. Compliance teams stop chasing documentation. And when brokers receive faster, cleaner quotes with fewer errors, they start sending more business back.

That’s where the flywheel begins to turn.

Each win compounds the next: brokers trust the process, underwriters see stronger hit ratios, and leaders recognize measurable growth without adding headcount. Trust drives efficiency. Efficiency builds loyalty. Loyalty fuels growth.

In the end, transparency accelerates the entire ecosystem.

AI that’s built to be believed

AI isn’t replacing underwriters. It’s replacing the friction that slows them down.

But for that to happen, the technology has to speak the same language as the industry: accuracy, traceability, accountability.

That’s why AI Space, powered by Upstage, was built from the ground up for insurance. It’s not AI for the sake of AI. It’s AI that earns its place within the art of underwriting.

Because the only thing more powerful than automation is trust in what’s being automated. When AI earns your trust, it earns its place in underwriting. And that’s where real transformation begins.
