Automating product price comparisons with a purpose-trained LLM


About

ConnectWave

ConnectWave is a leading e-commerce platform in Korea with more than 18 million monthly active users (MAU). It specializes in price-comparison services that connect consumers with a wide range of products across diverse categories. Known for its comprehensive catalog and user-friendly interface, ConnectWave helps consumers make informed purchasing decisions by providing accurate and detailed product information.

Problem

General-purpose large language models (LLMs) struggle to meet the demands of specific industries, such as e-commerce, because they rely on public datasets and general training. ConnectWave faced significant inefficiencies because product listers manually extracted and organized detailed product information from catalogs for its price-comparison website, Danawa. This work was time-consuming, yet critical to keeping product listings accurate and comprehensive, which directly affects user experience and operational efficiency.

Technical challenges

- Inadequate domain knowledge: General-purpose LLMs lacked the specialized understanding required for tasks like attribute-value extraction (AVE).

- Hallucination risks: Errors or ambiguous outputs could compromise the accuracy of product listings.

- Scalability and speed: High data volumes required an efficient model capable of handling extensive product catalogs quickly and accurately.

- Privacy concerns: Ensuring data security and protecting proprietary information was a critical requirement for ConnectWave.

Solution

Upstage developed a private, e-commerce-specialized LLM for ConnectWave. The model was fine-tuned specifically for attribute-value extraction and sentiment analysis, automating the extraction and categorization of product attributes while providing actionable insights from customer reviews.
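
To make attribute-value extraction concrete, here is a minimal sketch of what post-processing a model's AVE output might look like. It assumes a hypothetical output format (a JSON object mapping attribute names to values); the actual prompt and output schema used for ConnectWave are not described here. The `keep_grounded` filter is a simple, illustrative guard against the hallucination risk noted above: it drops any value that does not literally appear in the product text.

```python
import json


def parse_attribute_values(model_output: str) -> dict[str, str]:
    """Parse a JSON object of attribute -> value pairs (hypothetical output format).

    Returns an empty dict for malformed output and skips non-string or empty values.
    """
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError:
        return {}
    if not isinstance(data, dict):
        return {}
    return {
        str(attr).strip(): value.strip()
        for attr, value in data.items()
        if isinstance(value, str) and value.strip()
    }


def keep_grounded(pairs: dict[str, str], source_text: str) -> dict[str, str]:
    """Keep only values that appear verbatim (case-insensitive) in the source text."""
    haystack = source_text.lower()
    return {attr: value for attr, value in pairs.items() if value.lower() in haystack}


if __name__ == "__main__":
    product_text = "Acme 27-inch 4K monitor, IPS panel, black"
    raw = '{"brand": "Acme", "screen size": "27-inch", "resolution": "4K", "panel": "OLED"}'
    print(keep_grounded(parse_attribute_values(raw), product_text))
    # {'brand': 'Acme', 'screen size': '27-inch', 'resolution': '4K'}
```

A literal substring check is deliberately strict: it rejects the fabricated "OLED" value, but it would also reject legitimate normalized values (for example, "27 in" for "27-inch"), so a production system would likely need a softer matching rule.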

Developing the purpose-trained LLM for ConnectWave involved three steps:

- Post-training with public data: Public datasets were used to enhance the base model's understanding of commerce-specific data.

- Post-training with private data: ConnectWave’s proprietary datasets were incorporated to create a private, domain-specific LLM.

- Task fine-tuning: Task-specific fine-tuning further optimized the model for ConnectWave’s needs.
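
The three steps form a chain in which each stage starts from the checkpoint produced by the previous one. The sketch below expresses that ordering as a small stage plan. Everything in it is hypothetical: the stage names, dataset identifiers, and objectives (next-token prediction for the two post-training stages, supervised fine-tuning for the task stage) are assumptions for illustration, and `train_stage` stands in for whatever training job actually runs each step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Stage:
    name: str
    data: str       # hypothetical dataset identifier
    objective: str  # assumed: "causal_lm" for post-training, "sft" for task fine-tuning


# Hypothetical plan mirroring the three steps described above.
STAGES = [
    Stage("post-train-public", "public_commerce_corpus", "causal_lm"),
    Stage("post-train-private", "connectwave_private_corpus", "causal_lm"),
    Stage("task-finetune", "ave_and_sentiment_examples", "sft"),
]


def run_pipeline(
    base_checkpoint: str,
    stages: list[Stage],
    train_stage: Callable[[str, Stage], str],
) -> str:
    """Run stages in order; each one resumes from the previous stage's checkpoint."""
    checkpoint = base_checkpoint
    for stage in stages:
        checkpoint = train_stage(checkpoint, stage)
    return checkpoint


if __name__ == "__main__":
    # Stub trainer that only records the lineage of checkpoints.
    final = run_pipeline("base", STAGES, lambda ckpt, st: f"{ckpt}->{st.name}")
    print(final)  # base->post-train-public->post-train-private->task-finetune
```

Keeping the stages as data rather than hard-coded calls makes it easy to rerun only the later stages (for example, task fine-tuning alone) when new labeled examples arrive.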

Overview of creating a purpose-trained LLM

Mixture-of-experts

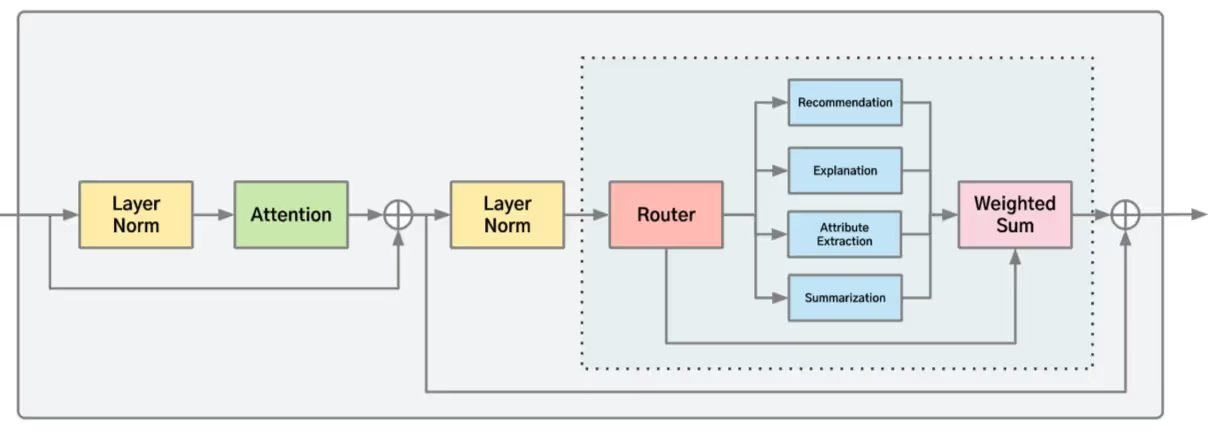

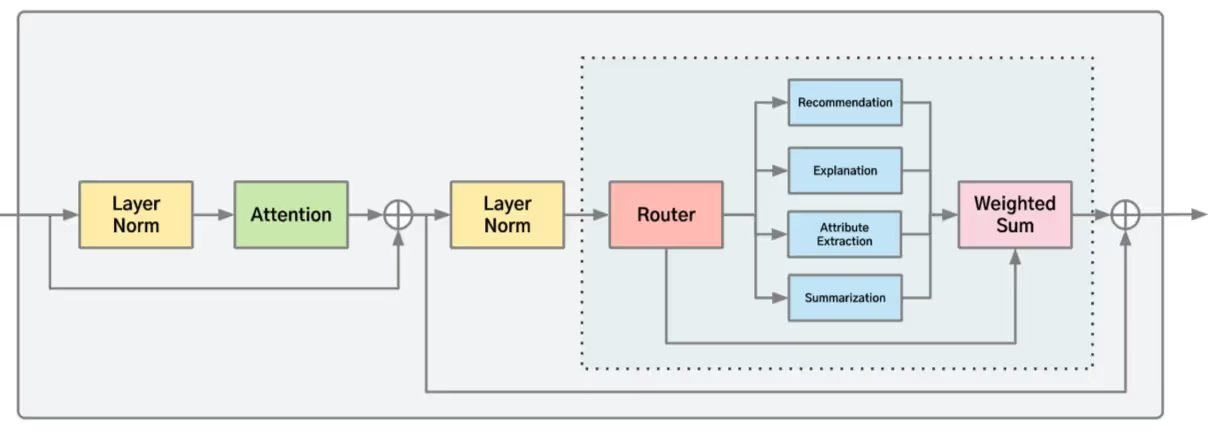

To incorporate domain-specific knowledge into the base LLM, Upstage employed a mixture-of-experts (MoE) architecture. The MLP layer of the Transformer block was expanded into N expert layers by copying it N times (a copy-and-paste approach). New components, a router network and a weighted-sum module, were added to control which experts are activated and to combine their outputs. Core structural elements such as LayerNorm and attention were retained from the base LLM and adjusted so the model could learn more effectively from the new data.
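
The copy-and-paste expansion described above resembles the technique often called sparse upcycling. The following is a minimal, dependency-free sketch of that idea, not Upstage's implementation: a dense two-layer MLP (ReLU activation assumed) is copied into `num_experts` identical experts, a newly initialized linear router scores the experts for each token, the `top_k` highest-scoring experts run, and their outputs are combined with softmax weights. The class name, the ReLU activation, the router initialization, and the `top_k` value are all assumptions.

```python
import copy
import math
import random


def mlp(x, w_in, w_out):
    """Dense feed-forward block: w_out @ relu(w_in @ x). w_in is d_ff x d_model."""
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_in]
    return [sum(w * hi for w, hi in zip(row, h)) for row in w_out]


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


class UpcycledMoE:
    """Hypothetical MoE layer built by copying one dense MLP into N experts."""

    def __init__(self, w_in, w_out, num_experts, top_k=2, seed=0):
        # Copy-and-paste initialization: every expert starts as an exact copy of the MLP.
        self.experts = [(copy.deepcopy(w_in), copy.deepcopy(w_out)) for _ in range(num_experts)]
        rng = random.Random(seed)
        d_model = len(w_in[0])
        # New router network: one logit per expert, computed from the token's hidden state.
        self.router = [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(num_experts)]
        self.top_k = top_k

    def forward(self, x):
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in self.router]
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        gates = softmax([logits[i] for i in top])  # renormalize over the selected experts
        out = [0.0] * len(self.experts[0][1])
        for gate, i in zip(gates, top):
            y = mlp(x, *self.experts[i])  # only the selected experts are evaluated
            out = [o + gate * yi for o, yi in zip(out, y)]  # weighted sum of expert outputs
        return out
```

A useful property of this initialization is that, before any further training, the MoE layer reproduces the dense MLP exactly: all experts are identical and the gate weights sum to 1. The experts only begin to diverge and specialize once post-training updates them on the new data.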

The MoE structure improves performance because its experts work together, with each expert MLP specializing in particular tasks or data types. This lets the model give precise, context-aware responses for tasks such as recommendation, explanation, attribute extraction, and summarization, improving overall customer service effectiveness.

An additional advantage of the MoE architecture is its serving efficiency. Because only the selected experts are activated for each token, the model is sparse: each token uses far less computation than it would in a dense model with a similar total parameter count, which significantly speeds up inference. This efficiency enables Upstage to deliver a model that is both faster and finely tuned to customer-specific needs, outperforming generic models in speed and accuracy.
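
The speed advantage comes down to simple arithmetic: per-token compute scales with the parameters a token actually uses, not with the total parameter count. The sketch below counts both, under stated assumptions: each expert is a two-matrix MLP without biases, the router is a single `d_model x num_experts` matrix per layer, and `shared_params` lumps together everything every token uses (attention, LayerNorm, embeddings). None of the sizes refer to the ConnectWave model, whose dimensions are not published here.

```python
def moe_param_counts(d_model, d_ff, n_layers, num_experts, top_k, shared_params):
    """Return (total, active_per_token) parameter counts for a simple MoE model.

    Assumptions: each expert is a bias-free two-matrix MLP (d_model x d_ff and
    d_ff x d_model); each MoE layer has one linear router of size d_model x num_experts.
    """
    expert = 2 * d_model * d_ff
    router = d_model * num_experts
    total = shared_params + n_layers * (num_experts * expert + router)
    active = shared_params + n_layers * (top_k * expert + router)
    return total, active


if __name__ == "__main__":
    # Hypothetical configuration for illustration only.
    total, active = moe_param_counts(
        d_model=4096, d_ff=14336, n_layers=32, num_experts=8, top_k=2,
        shared_params=1_500_000_000,
    )
    print(f"total={total:,} active={active:,} ratio={active / total:.2f}")
```

With top-2 routing over 8 experts, each token evaluates a quarter of the expert MLP weights. All experts must still be held in memory, so the saving is in compute per token rather than in model size.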

Leveraging technology partners

AWS SageMaker played a pivotal role in the project's success by supporting Upstage's continual post-training. SageMaker enabled efficient processing of extensive datasets within tight project timelines, improving productivity and keeping model development on track.

SageMaker's distributed training capabilities allowed large-scale datasets to be processed in parallel across multiple computational resources, drastically reducing training time. Its scalability also let the training setup adapt to growing data volumes and higher throughput demands. Together, this efficiency and scalability gave Upstage a robust and flexible way to handle intensive post-training requirements.
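
The case study does not say which distributed strategy was used, so the following is a generic data-parallelism sketch, not SageMaker's API. Each worker (identified by a hypothetical `rank`) trains on its own shard of the data, and the per-worker gradients are averaged, which is what an all-reduce does across machines. A one-parameter least-squares model keeps the example small enough to check by hand.

```python
def shard_indices(num_examples: int, world_size: int, rank: int) -> list[int]:
    """Strided sharding: worker `rank` gets examples rank, rank + world_size, ..."""
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    return list(range(rank, num_examples, world_size))


def mse_grad(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient with respect to w of mean((w * x - y) ** 2) over the given examples."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w: float, xs: list[float], ys: list[float], world_size: int) -> float:
    """Simulate data-parallel training: per-shard gradients, then an all-reduce average."""
    if len(xs) % world_size != 0:
        # Equal shard sizes make the averaged gradient match the full-batch gradient.
        raise ValueError("number of examples must be divisible by world_size")
    local_grads = []
    for rank in range(world_size):  # on a real cluster, these run concurrently
        idx = shard_indices(len(xs), world_size, rank)
        local_grads.append(mse_grad(w, [xs[i] for i in idx], [ys[i] for i in idx]))
    return sum(local_grads) / world_size  # stands in for an all-reduce mean
```

Because every shard has the same size, averaging the per-shard gradients gives the same update as processing the whole batch on one machine, while each worker only touches `1 / world_size` of the data. That is the basic reason parallel processing shortens training time without changing what the model learns.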

Results

The customized LLM significantly reduced manual workloads for ConnectWave’s product listers, automating data extraction and categorization tasks with high accuracy. It outperformed GPT-3.5 on the AE-110k benchmark and enhanced the standardization of product metadata. The solution improved the shopping experience for users, increased productivity for ConnectWave, and demonstrated the transformative potential of domain-specific private LLMs in e-commerce.
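
The case study does not state which metric was used for the AE-110k comparison. A common way to score attribute-value extraction is micro-averaged precision, recall, and F1 over exact-match (attribute, value) pairs, sketched below as an assumption. Predictions and gold labels are given as one attribute-to-value dict per product, and matching is case-insensitive.

```python
def pair_f1(
    predicted: list[dict[str, str]], gold: list[dict[str, str]]
) -> tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 over exact-match (attribute, value) pairs."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must cover the same products")
    tp = fp = fn = 0
    for pred, ref in zip(predicted, gold):
        p = {(a.lower(), v.lower()) for a, v in pred.items()}
        g = {(a.lower(), v.lower()) for a, v in ref.items()}
        tp += len(p & g)  # correct pairs
        fp += len(p - g)  # extracted but wrong or spurious
        fn += len(g - p)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    predicted = [{"brand": "Acme", "color": "red"}, {"size": "XL"}]
    gold = [{"brand": "Acme", "color": "blue"}, {"size": "XL", "material": "cotton"}]
    print(tuple(round(v, 3) for v in pair_f1(predicted, gold)))  # (0.667, 0.5, 0.571)
```

Precision penalizes wrong or hallucinated values and recall penalizes missed attributes, so tracking both separately is more informative for catalog work than F1 alone.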




Upstage collaborated with ConnectWave to develop a domain-specific large language model (LLM) for e-commerce tasks, addressing inefficiencies in manual product data extraction and organization. The customized LLM, built on Upstage's mixture-of-experts architecture, automated the extraction of attribute-value pairs and streamlined product catalog management. By significantly reducing manual workloads and improving data accuracy, the solution enhanced operational efficiency and user experience. The model outperformed GPT-3.5 on the AE-110k benchmark, demonstrating the potential of private, domain-specific LLMs to transform e-commerce operations.
